By Annabelle King October 10, 2025

In today’s digital economy, protecting customer payment data is no longer optional — it’s essential. Every time a consumer enters their credit card number, expiration date, or CVV into an e-commerce site, mobile app, or point-of-sale device, sensitive data is transferred through multiple systems.

Cybercriminals continuously evolve methods to intercept or steal this data, leading to fraud, identity theft, and loss of trust. Tokenization is one of the most effective tools in the security toolkit to safeguard such data.

In simple terms, tokenization protects customer payment data by replacing the real, sensitive values (like the card number) with a meaningless surrogate or “token” that cannot be exploited if intercepted.

When properly implemented, tokenization ensures that the merchant or intermediary handling the payment never stores or transmits the actual card details, drastically reducing the attack surface.

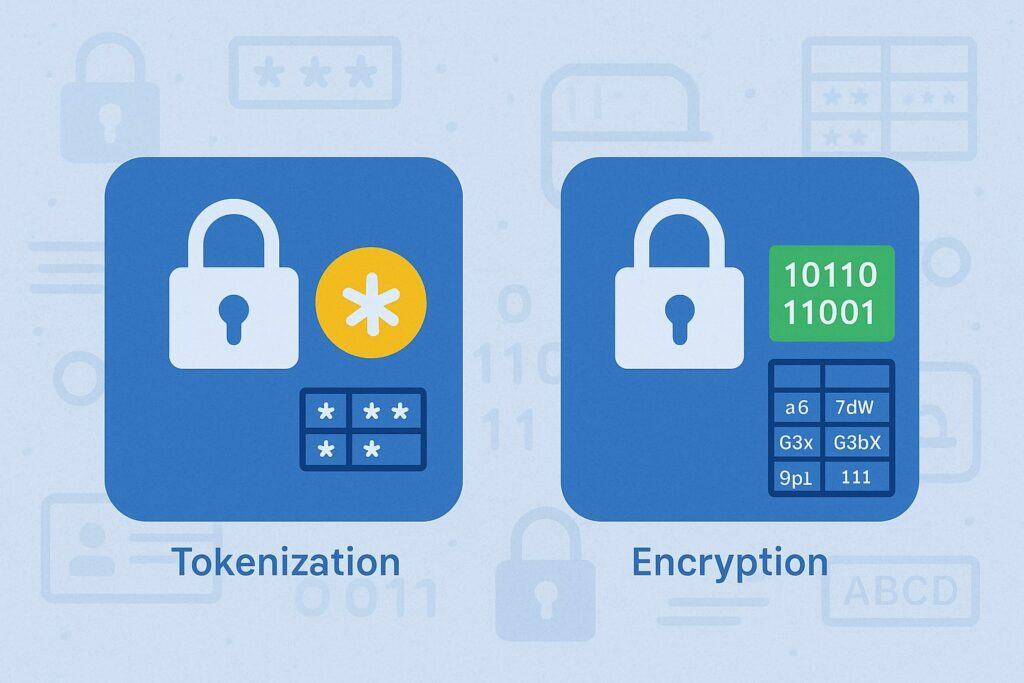

Unlike encryption, where data is scrambled by an algorithm and can be recovered by anyone holding the key, tokenization creates a substitute with no mathematical relationship to the original: it can be mapped back only through the token vault. In this way, tokenization protects customer payment data by making a breach far less damaging and reducing the burden of regulatory compliance.

In this article, we explore in depth how tokenization protects customer payment data: its mechanisms, benefits, challenges, architecture, best practices, comparisons with encryption, real-world use cases, and how businesses can adopt it.

We also address common questions and misconceptions. By the end, you should have a clear, updated guide on using tokenization as a robust shield for payment data.

What Is Tokenization in Payment Security?

To understand how tokenization protects customer payment data, the first step is to define what tokenization means in this context, and how it differs from other data-protection methods.

Definition and Fundamental Concept

Tokenization is the process of replacing a sensitive data element (for example, a credit card's Primary Account Number, or PAN) with a non-sensitive surrogate value known as a token.

The token is typically a randomly generated string (or, in some designs, a value derived through a tightly controlled keyed mapping) that has no exploitable intrinsic value or pattern outside its intended context.

The original data is stored securely in a token vault or secure environment, and only authorized systems can map (or “de-tokenize”) the token back to its original value.

In simpler terms: when a customer submits payment information, the system exchanges (or “tokenizes”) the real information into a meaningless token. All later internal systems operate on the token, not the real data. Even if a hacker intercepts or steals the token, it is worthless without access to the vault system or mapping logic.

This design ensures that the systems handling payments never possess or store the full card data, thus dramatically reducing risk. As Wikipedia describes, tokens are “non-sensitive equivalents” that map back to the sensitive data via secure systems, but are infeasible to reverse-engineer without that system.

Token Vault and Mapping Architecture

An essential component of tokenization is the token vault (sometimes also called the tokenization server or token service).

This vault holds the mapping between the token and the original sensitive data, typically protected by strong encryption, strict access controls, logging, and segmentation. Only the tokenization system can resolve (or detokenize) a token back into the original data under controlled conditions.

The architecture often separates the token vault from all other systems. Front-end and internal systems (merchant web servers, order management systems, internal databases) operate using tokens. Only a minimal, highly secured layer (often a third-party service) ever sees or touches real payment data.

Because of this separation, even if a merchant’s database is breached, attackers only obtain tokens—not real PANs or CVVs. Tokens, by themselves, are useless for fraud. This is how tokenization protects customer payment data at rest and in transit.
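To make the vault idea concrete, here is a minimal in-memory sketch in Python (standard library only). The `TokenVault` class and its method names are hypothetical; a real vault would also encrypt the stored PANs, persist them durably, and run on isolated infrastructure behind authenticated APIs.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault mapping random tokens to PANs (illustration only)."""

    def __init__(self) -> None:
        self._token_to_pan: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        # The token is pure randomness (128 bits): nothing in it is derived from the PAN.
        token = "tok_" + secrets.token_hex(16)
        self._token_to_pan[token] = pan
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can recover the original value.
        return self._token_to_pan[token]


vault = TokenVault()
token = vault.tokenize("4111111111111111")  # a standard test card number

# The merchant's database stores only the token, never the PAN.
merchant_db = {"order-1001": {"card_token": token}}
```

A breach of `merchant_db` in this sketch yields only random `tok_` strings, which map to nothing without the vault. Tokenizing the same card twice also produces two unrelated tokens, so tokens cannot be compared to infer which card they represent.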

Types of Tokens: High-Value vs. Low-Value, Single-use vs. Multi-use

Tokens vary depending on usage and design. Some common distinctions include:

- High-Value Tokens (HVTs): These tokens can act as full surrogates for the original PAN and may be used to process future payments. They often mimic the format of the original data (same length and structure), so systems that accept tokens need little modification. HVTs are often network- or merchant-specific and can be bound to specific merchants or devices.

- Low-Value Tokens (LVTs): These are more restricted, often used for internal reference or partial replacement, and cannot themselves authorize transactions.

- Single-use tokens: Valid only for one transaction. Once consumed, they become useless.

- Multi-use tokens: Can be reused across multiple transactions (for example, for recurring billing or one-click checkout) but still do not expose the real card data.

Choosing between these types depends on use case (e.g. recurring billing, refunds, device binding) and risk profile.
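The difference between single-use and multi-use tokens comes down to what the token service checks when a token is presented. The sketch below is a hypothetical illustration, not any provider's API: `TokenService`, `issue`, and `redeem` are invented names, and `redeem` stands for the lookup the service performs internally during authorization (the PAN is never handed back to the merchant).

```python
import secrets
from dataclasses import dataclass


@dataclass
class TokenRecord:
    pan: str
    single_use: bool
    used: bool = False


class TokenService:
    """Hypothetical token service distinguishing single-use and multi-use tokens."""

    def __init__(self) -> None:
        self._records: dict[str, TokenRecord] = {}

    def issue(self, pan: str, single_use: bool) -> str:
        token = secrets.token_urlsafe(24)
        self._records[token] = TokenRecord(pan=pan, single_use=single_use)
        return token

    def redeem(self, token: str) -> str:
        """Resolve a token inside the service; single-use tokens are consumed."""
        record = self._records.get(token)
        if record is None:
            raise KeyError("unknown token")
        if record.single_use and record.used:
            raise PermissionError("single-use token already consumed")
        record.used = True
        return record.pan
```

A one-time checkout would call `issue(pan, single_use=True)`; a subscription would use `single_use=False` so the same token can be redeemed on every billing date.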

Tokenization vs. Encryption: Key Distinctions

Although both tokenization and encryption are data protection techniques, they differ fundamentally:

- Encryption uses mathematical algorithms to scramble data, and decryption with the right key reverses the process. The ciphertext is still a transformed version of the original, so if attackers obtain the key or the algorithm is weak, the data can be recovered. Tokenization, in contrast, does not transform the data at all; it substitutes it. A token can be mapped back only through the token vault, which is why tokens are described as non-reversible outside that system.

- Encryption adds computational cost to every protected operation, which can add up at high transaction volumes. Tokenization is generally lighter for the systems that handle tokens, because tokens are simple references.

- Tokenization reduces PCI DSS (Payment Card Industry Data Security Standard) scope for merchants. Because the systems store only tokens, not actual card data, many controls required by PCI may no longer apply to those systems.

- Encryption protects data at rest and in transit, but wherever it is decrypted inside a system, the data can be exposed. Tokenization limits those exposure points by keeping the real data out of most systems entirely.

A good strategy is often to combine tokenization and encryption: encrypt data in transit (TLS) and once inside the secure environment, tokenize it for internal usage. This paired approach gives layered protection.

By understanding these foundational principles, we see clearly how tokenization protects customer payment data: by replacing sensitive information with meaningless surrogate tokens, restricting exposure, and limiting the value of any captured data.

How Tokenization Protects Payment Data During Transactions

Having defined tokenization, we now delve into the detailed flow of how tokenization protects customer payment data during each phase of a transaction—collection, token generation, transmission, storage, and reconciliation.

Step-by-Step Workflow of Payment Tokenization

Here is a typical tokenization workflow in a payment transaction:

- Data Entry / Collection: The customer enters card data (PAN, expiry date, CVV) on a merchant website, mobile app, or payment terminal (POS).

- Secure Transmission to Token Service: Immediately, the sensitive data is routed (often via TLS/SSL) to a tokenization service (often provided by a payment gateway or third-party token provider), so the merchant's own systems do not store or handle raw card data.

- Token Generation / Issuance: The tokenization service generates a unique token corresponding to the card data. It may use randomization, cryptographic functions, or mapping techniques. That token is returned to the merchant’s system.

- Token Storage & Use: The merchant stores or uses the token for future transactions or references. For instance, in subscription billing, the merchant uses the token to charge the customer later—without ever touching the real card.

- Payment Processing: During the actual payment authorization, the token is mapped (by the token provider) to the original card data behind the scenes and forwarded to the card network / issuer for approval. The merchant remains oblivious to the real card data.

- Settlement / Reconciliation / Refunds: For operations like refunds or chargebacks, the token serves as a reference. If more data is needed, a controlled de-tokenization process occurs within the token vault, not on merchant systems.

Because only the token circulates through the merchant’s environment, the actual card credentials remain protected in the vault.
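The workflow above can be sketched in a few lines. This is a simplified model under stated assumptions: `TokenProvider`, `Merchant`, and the `_send_to_network` placeholder are hypothetical, and in practice the card data would reach the provider directly from the payment form or terminal rather than through merchant code.

```python
import secrets


class TokenProvider:
    """Hypothetical token provider: owns the vault and talks to the card network."""

    def __init__(self) -> None:
        self._vault: dict[str, dict[str, str]] = {}

    def tokenize(self, pan: str, expiry: str) -> str:
        # Secure transmission + token generation: card data arrives (over TLS)
        # and is swapped for a random token. The CVV is deliberately not kept:
        # PCI DSS forbids storing it after authorization.
        token = "tok_" + secrets.token_hex(12)
        self._vault[token] = {"pan": pan, "expiry": expiry}
        return token

    def authorize(self, token: str, amount_cents: int) -> bool:
        # Payment processing: detokenize inside the provider, then forward.
        card = self._vault[token]
        return self._send_to_network(card["pan"], card["expiry"], amount_cents)

    def _send_to_network(self, pan: str, expiry: str, amount_cents: int) -> bool:
        # Placeholder for the real network/issuer call; approves positive amounts.
        return amount_cents > 0


class Merchant:
    """Merchant system that only ever stores and sends tokens."""

    def __init__(self, provider: TokenProvider) -> None:
        self.provider = provider
        self.saved_cards: dict[str, str] = {}  # customer id -> token

    def save_card(self, customer_id: str, token: str) -> None:
        # Token storage & use: persist the token, never the PAN.
        self.saved_cards[customer_id] = token

    def charge(self, customer_id: str, amount_cents: int) -> bool:
        return self.provider.authorize(self.saved_cards[customer_id], amount_cents)


provider = TokenProvider()
merchant = Merchant(provider)
# Data entry: in practice the payment form sends card data straight to the provider.
token = provider.tokenize("4111111111111111", "12/27")
merchant.save_card("cust-42", token)
approved = merchant.charge("cust-42", 1999)  # e.g. a later subscription charge
```

The design choice to notice is that `Merchant` has no code path that ever receives a PAN: it can charge, but it cannot read card numbers.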

Protecting Data in Transit and at Rest

A tokenization approach is most effective when paired with strong protections for data in transit — that is, when card data is first sent from client to the tokenization engine. Typically, secure transport protocols like TLS (version 1.2 or above) are used to guard against interception or man-in-the-middle attacks.

Once data reaches the tokenization system, it is immediately swapped for a token, and the original is stored in a highly secured, isolated vault. The merchant’s systems never hold raw card data “at rest,” only tokens, meaning a breach of a merchant database cannot expose real card numbers.

Thus, tokenization protects customer payment data both during transit and in storage by restricting where the original data lives and how it’s used.

Limiting Exposure: Attack Surface Reduction

One of the strongest benefits of tokenization is that it dramatically reduces the attack surface. Without tokenization, a merchant’s web servers, database servers, internal applications, APIs—all can become prime targets, because each might handle sensitive card data.

But with tokenization, most of those systems never see card numbers at all—they interact only with tokens.

Even if a hacker breaches the merchant’s infrastructure, they retrieve only tokens—which by themselves cannot be used to initiate unauthorized payments. In effect, tokenization compartmentalizes risk and isolates the most valuable data (card details) into one hardened vault.

Scoped PCI Compliance and Liability Reduction

Because tokenization removes card data from most systems, many merchant systems can fall out of scope for PCI DSS requirements.

PCI DSS (the industry standard for handling cardholder data) prescribes strong controls (data access, encryption, monitoring, network segmentation) for systems that store, process, or transmit card data.

If a system handles only tokens and has no ability to detokenize them, it is generally not treated as storing cardholder data, which reduces the number of controls that apply.

As a result, not only is the technical effort reduced, but the potential liability in the event of a breach is also lessened. Merchants can concentrate their security effort on the few components that still touch card data, and outsource much of the remaining burden to a token service provider (TSP).

Handling Exceptions: De-tokenization Under Strict Control

Sometimes, a token must be reversed (detokenized)—for example, to investigate a transaction, issue a refund, or reconcile payments.

These operations happen only inside the secure vault environment, under strict audit, role-based access control, and in limited, controlled processes. Detokenization is restricted to authorized personnel or modules, minimizing risk.

In this way, tokenization protects customer payment data not only by limiting who sees the data, but by tightly governing when and how it can ever be accessed.
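Here is a sketch of detokenization guarded by a role allow-list with an audit trail. The role names, the `GuardedVault` class, and the log fields are assumptions chosen for illustration; a production system would authenticate callers (for example, with mutual TLS or signed service credentials) rather than trust a role string passed in.

```python
import secrets
from datetime import datetime, timezone


class GuardedVault:
    """Hypothetical vault that lets only approved roles detokenize, logging every attempt."""

    ALLOWED_ROLES = frozenset({"refunds-service", "fraud-investigator"})

    def __init__(self) -> None:
        self._mapping: dict[str, str] = {}
        self.audit_log: list[dict[str, object]] = []

    def tokenize(self, pan: str) -> str:
        token = secrets.token_hex(16)
        self._mapping[token] = pan
        return token

    def detokenize(self, token: str, caller: str, role: str, reason: str) -> str:
        allowed = role in self.ALLOWED_ROLES
        # Record the attempt before acting on it, so denied requests are logged too.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "caller": caller,
            "role": role,
            "reason": reason,
            "token": token,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role {role!r} may not detokenize")
        return self._mapping[token]
```

Requiring a `reason` on every call is a small design choice that pays off in audits: each detokenization can later be matched to a refund, investigation, or other business event.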

By carefully orchestrating the workflow, isolating systems, and enforcing strong access policies, tokenization builds a strong, layered shield around customer payment data throughout a transaction’s lifecycle.

Key Benefits: Why Tokenization Protects Customer Payment Data So Effectively

Now that we have the mechanics in place, let’s examine the core benefits, showing how tokenization protects customer payment data in practical, business-meaningful ways.

Fraud and Data Breach Mitigation

A major benefit of tokenization is that it neutralizes the value of stolen data. If a cybercriminal compromises the merchant’s database or intercepts transaction traffic, they obtain only tokens—useless for purchasing or identity theft. This drastically reduces the damage from breaches.

In many implementations, tokens are also context-bound: a token may only work for a given merchant, device, or time window. That specificity further diminishes its usefulness to attackers.

Modern tokenization services may also detect anomalies (e.g. token used by a different merchant) and flag fraudulent activity.

By removing sensitive data from everywhere except the token vault, tokenization significantly reduces the “blast radius” of any breach—thus, tokenization protects customer payment data by making exposure of real data improbable.

Lowering Cost and Complexity of Compliance

As discussed earlier, because tokens are generally not considered cardholder data under PCI DSS, merchant infrastructures become simpler to audit and certify.

Controls related to stored-data encryption, key management, and access logging may be eased or removed from scope for systems that hold only tokens. This reduces compliance burden, operational cost, and audit risk.

In short, a main reason organizations adopt tokenization is that it makes compliance easier without weakening security: taking card data out of most systems also takes those systems out of scope.

Seamless Customer Experience & Repeat Use

Customers today expect frictionless checkout experiences—like one-click purchases, stored card preferences, and recurring billing.

Tokenization enables these features securely: the merchant stores only a token and can use it for future payments without prompting the customer for card details again. This improves usability, reduces friction, and fosters trust, all while protecting cardholder data.

By decoupling the storage of card credentials from merchant systems, tokenization protects customer payment data while preserving convenience.

Device-Based and Contextual Token Binding

Modern tokenization solutions support advanced features like device binding—creating tokens tied to a specific device (such as a user’s smartphone)—and contextual binding (e.g. channel, merchant, time window).

For example, a token generated for merchant A cannot be reused at merchant B, or a token assigned to one mobile device cannot be used elsewhere. This further limits misuse and acts like a second line of defense.

Because each token is tightly scoped, a hacker who intercepts one cannot reuse it elsewhere. That is another concrete way tokenization protects customer payment data through contextual constraints.
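A hedged sketch of contextual binding: the token service records which merchant (and optionally which device) a token was issued for and refuses to resolve it in any other context. The class and field names are hypothetical.

```python
import secrets
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BoundToken:
    pan: str
    merchant_id: str
    device_id: Optional[str]  # None means "any device at this merchant"


class BindingTokenService:
    """Hypothetical service that resolves a token only in the context it was issued for."""

    def __init__(self) -> None:
        self._records: dict[str, BoundToken] = {}

    def issue(self, pan: str, merchant_id: str, device_id: Optional[str] = None) -> str:
        token = secrets.token_hex(16)
        self._records[token] = BoundToken(pan, merchant_id, device_id)
        return token

    def resolve(self, token: str, merchant_id: str, device_id: Optional[str] = None) -> str:
        record = self._records.get(token)
        if record is None:
            raise PermissionError("unknown token")
        if record.merchant_id != merchant_id:
            raise PermissionError("token not valid for this merchant")
        if record.device_id is not None and record.device_id != device_id:
            raise PermissionError("token not valid on this device")
        return record.pan
```

A token issued with `merchant_id="merchant-A", device_id="phone-1"` resolves only when both values match; presenting it at merchant B, or from another phone, raises `PermissionError`.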

Scalability, Performance, and Efficiency

Tokenization is typically lightweight in operation: issuance, storage, or lookup of tokens is low overhead, especially compared to heavy cryptographic operations.

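It scales well across high-volume transaction systems. Because most systems simply pass tokens around rather than performing encryption and decryption, the added latency is typically small, although the vault lookups themselves must still be engineered for the load. This helps deliver secure performance at scale.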

In summary, tokenization not only strengthens security but does so without compromising speed or scalability. This makes it a practical, performant tool in protecting customer payment data.

Tokenization Architecture & Deployment Models

Understanding architecture and deployment is essential to realize how tokenization protects customer payment data in real systems. Here, we explore typical architectures, deployment models, and variations.

Centralized Token Vault vs. Federated / Distributed Vaults

A common architecture uses a centralized token vault: a single, isolated, highly secured service that maps sensitive data to tokens for all merchants or clients. This simplifies vault management, audit, and update control.

Alternatively, federated or distributed vaults may be deployed across regions or business units to reduce latency or meet data residency requirements. Each local vault might synchronize token-mapping data (or partition responsibilities) in a controlled fashion.

Regardless of vault topology, the core principle remains: token vaults must be strictly isolated and only accessible through hardened, authenticated APIs or services.

In-House vs. Outsourced Tokenization

Organizations can choose to build and operate a tokenization system internally, or use a third-party tokenization service (e.g. via a payment gateway or token service provider). Many prefer outsourcing, because:

- Third-party providers specialize in security and compliance.

- They operate hardened vaults, key management, audits, failover, and disaster recovery.

- They absorb much of the compliance burden.

- They provide APIs and integrations.

Outsourced tokenization is a popular choice precisely because it offloads much of the risk and complexity without weakening the protection tokenization provides.

In-house tokenization, by contrast, gives more control but requires deep expertise, infrastructure, audit processes, and continuous security investment. It carries more risk, but it is feasible for large enterprises.

Tokenization Service API Layers

Typically, a tokenization system provides well-defined APIs for:

- Token generation / issuance — creating new tokens from raw data.

- Token query / lookup — retrieving token metadata (without detokenizing).

- Detokenization — controlled conversion of token back to real data (usually limited to trusted subsystems).

- Token lifecycle management — expiration, revocation, rotation, reissuance, device binding.

- Analytics, logging, audit — tracking all token operations.

Merchant systems integrate via secure API calls—never handling raw PANs or CVVs. This modular architecture is central to how tokenization protects customer payment data: via controlled, narrow access into the vault.

Hybrid Approaches: Format-Preserving Tokenization and Stateless Tokens

Some systems use format-preserving tokenization: tokens mirror the format of the original data (same length and character set, sometimes preserving the last four digits). This allows legacy systems to accept tokens without major changes. Provided the non-preserved digits are generated randomly or with a strong keyed construction, the token cannot be reversed by cryptanalysis, so the sensitive data remains protected.
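The sketch below (Python, standard library only) generates a format-preserving token that keeps the PAN's length and last four digits and randomizes the rest. The Luhn-failing rule is an assumption: it is one convention some providers use so that a token can never be mistaken for, or collide with, a real card number. The function names are hypothetical.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Standard Luhn (mod 10) check digit validation used by card PANs."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def format_preserving_token(pan: str) -> str:
    """Random numeric token with the PAN's length and last four digits.

    Assumption: tokens are forced to FAIL the Luhn check, so a token is never
    a syntactically valid card number (and therefore never equals the PAN).
    """
    if not pan.isdigit() or len(pan) < 12:
        raise ValueError("expected a numeric PAN")
    last_four = pan[-4:]
    while True:
        body = "".join(secrets.choice("0123456789") for _ in range(len(pan) - 4))
        candidate = body + last_four
        if not luhn_valid(candidate):  # roughly 9 in 10 candidates pass this test
            return candidate
```

Because the randomized digits come from `secrets` rather than from the PAN, nothing about the hidden digits can be recovered from the token; only the vault mapping links the two.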

Also, newer stateless tokenization approaches avoid a central token database by deriving tokens deterministically from inputs with keyed cryptographic functions (for example, format-preserving encryption). The mapping can be recomputed on the fly, reducing storage overhead. A keyed hash alone, by contrast, yields a one-way value that is useful for matching repeat cards but cannot be detokenized. Either way, such designs require careful cryptographic construction to avoid unauthorized reversal, collisions, or predictability.
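To illustrate the keyed-hash variant, the sketch below derives a deterministic value with HMAC-SHA256 from Python's standard library. The function name and `fp_` prefix are hypothetical. Note that a keyed hash is one-way: the same card always yields the same value, which supports matching without a lookup table, but the PAN cannot be recovered from it. The key matters too: card numbers have limited entropy (known issuer prefixes plus a check digit), so an unkeyed hash could be brute-forced back to the PAN.

```python
import hashlib
import hmac


def card_fingerprint(pan: str, key: bytes) -> str:
    """Deterministic, one-way token derived with HMAC-SHA256 (no vault lookup needed).

    The same PAN and key always give the same output, so repeat cards can be
    matched statelessly; the PAN cannot be recovered from the output.
    """
    digest = hmac.new(key, pan.encode("ascii"), hashlib.sha256).hexdigest()
    return "fp_" + digest[:32]  # 128 bits of the digest is ample for matching
```

In practice the key would live in an HSM or key-management service and be rotated under a defined policy; rotating it changes every fingerprint, which is worth planning for before adoption.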

These hybrid strategies are advanced, but they further demonstrate the flexibility in how tokenization protects customer payment data while accommodating system constraints.

Use Cases & Real-World Applications

To illustrate how tokenization protects customer payment data, let’s examine real-world use cases and scenarios where it provides strong security and operational benefits.

E-Commerce and One-Click Checkout

Online retailers often want to store a customer’s card information for future purchases or one-click checkouts. Without tokenization, storing card details would expose them to risk. With tokenization, the merchant stores only a token.

On subsequent purchases, the merchant uses that token to process payment—without requiring the customer to reenter data or exposing real card data. This yields both convenience and safety.

Even if the merchant’s systems are compromised, only tokens (not card numbers) are exposed. This is a direct demonstration of how tokenization protects customer payment data in a high-traffic e-commerce setting.

Mobile Wallets & Contactless Payments

Digital wallets such as Apple Pay, Google Pay, and Samsung Pay rely heavily on tokenization. When a user adds a card to their wallet, the wallet system requests a token from the card network.

That token is what is used in transactions—never the real PAN. Even if the user’s phone is lost or stolen, the token cannot be used elsewhere.

Contactless NFC payments, which need to be fast and secure, depend on tokenization to avoid exposing real card details. Each transaction typically also carries a one-time cryptogram generated on the device, so a captured token cannot simply be replayed. This is a prime example of tokenization protecting customer payment data in mobile and contactless contexts.

Point of Sale (POS) Systems and In-Store Payments

Retailers using POS systems can adopt tokenization to ensure that card data entering the POS device is immediately converted into tokens.

Some systems pair point-to-point encryption (P2PE) with tokenization: card data is encrypted at the terminal, decrypted in a secure environment, and then tokenized before being stored. This ensures that the merchant environment never handles raw data.

Thus, even if the POS system is compromised (a common target), the attacker retrieves only tokens—not card numbers. This is another practical manifestation of how tokenization protects customer payment data in brick-and-mortar stores.

Recurring Billing and Subscription Services

For subscription-based businesses (streaming services, SaaS, utilities), storing card data is often necessary to charge customers periodically. Tokenization allows the merchant to store a token instead of card details.

When the billing date arrives, the merchant uses the token to make a charge. If the token is stolen, it cannot be reverse-engineered. This provides both convenience and security.

Through tokenization, businesses can support recurring payments while protecting customer payment data continuously over the subscription lifecycle.

Chargebacks, Refunds, and Customer Support

When customers request refunds or chargeback investigations, merchants often need to reference the original transaction. Tokens serve as references to those transactions.

If a deeper look into the original card data is needed, detokenization occurs in a tightly controlled environment. This restricts access to real card data and minimizes exposure, yet supports necessary business functions.

Even in support incidents or audits, the ability to protect customer payment data remains intact, because tokenization isolates real data to the vault only.

Challenges, Risks & Mitigations

Tokenization is powerful, but not a silver bullet. To truly understand how tokenization protects customer payment data, we must also examine its challenges and how to mitigate them.

Vault as a Single Point of Failure

While token vaults centralize control, they also become a high-value target. If a hacker breaches the vault and gains access to the token mappings, the entire system is compromised.

Thus, vault infrastructure must be hardened, segmented, encrypted, audited, and possibly distributed (with replication and failover). Frequent monitoring, intrusion detection, and token-mapping encryption are essential.

Token Reversibility & Predictability Risk

If tokens are generated weakly, or in predictable patterns, attackers might reverse-engineer or guess mappings. Secure tokenization schemes must ensure tokens are non-predictable, non-deterministic (unless carefully designed), and resistant to cryptanalysis. Always choose token generation logic guided by security experts and industry best practices.

Token Collisions or Duplication

In rare cases, two sensitive data elements may inadvertently map to the same token (a collision), undermining uniqueness or accuracy. Tokenization systems must include collision resistance checks and handle token refreshing or regeneration as needed.
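A common way to guarantee uniqueness is to check each freshly generated random token against the existing mappings and regenerate on a clash. This is a minimal sketch with hypothetical names; with a real database, a unique constraint on the token column (and a retry on constraint violation) is safer than check-then-insert, because two concurrent requests could otherwise claim the same token.

```python
import secrets


class CollisionSafeVault:
    """Hypothetical vault that verifies every new token is unique before storing it."""

    def __init__(self, token_bytes: int = 16, max_attempts: int = 5) -> None:
        self.token_bytes = token_bytes
        self.max_attempts = max_attempts
        self._mapping: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        for _ in range(self.max_attempts):
            token = secrets.token_hex(self.token_bytes)
            if token not in self._mapping:  # uniqueness check before committing
                self._mapping[token] = pan
                return token
        # Repeated clashes mean the token space is nearly exhausted: fail loudly.
        raise RuntimeError("could not generate a unique token; token space may be too small")
```

With the default 16 random bytes a clash is astronomically unlikely, so the retry loop mostly guards against misconfiguration, such as a token format that leaves too few random digits.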

Performance, Latency & Scalability

A heavy load of token generation, lookup, and detokenization requests can strain the vault. Designing for caching, local lookups, sharded architectures, or distributed vaults helps maintain performance. Ensuring redundancy and fallback is key.

Regulatory and Data Residency Constraints

In some jurisdictions, storing even the mapping between token and original data may require adhering to local laws (e.g. GDPR). Tokenization systems must satisfy data residency, auditability, and regulatory compliance. Organizations may need to localize vaults in regions or apply techniques that respect local privacy laws.

Integration Complexity & Legacy Systems

Some older systems expect full PAN formats or rely on legacy merchant workflows. Integrating token-based flows may require extensive refactoring or compatibility layers (e.g. format-preserving tokens). Carefully planning integration is crucial.

Insider Threats & Access Control

Employees or insiders with vault access could misuse their privileges. Strict role-based access control, separation of duties, multi-factor authentication, logging, audits, and least-privilege policies are essential to mitigate this risk.

By identifying these challenges and implementing mitigations, organizations strengthen how tokenization protects customer payment data in practice—not just in theory.

Best Practices & Guidelines for Secure Tokenization

To ensure tokenization truly secures payment data, organizations should follow best practices. These guidelines show precisely how tokenization protects customer payment data reliably and robustly.

Use Strong Randomized Token Generation

Tokens must be generated with cryptographically secure randomness or key-based mapping techniques. Avoid predictable sequences or derivation patterns. Token generation should be irreversible and resistant to cryptanalysis.

Segment and Isolate the Token Vault

Vault systems should be on separate, isolated infrastructure, with no direct network paths from less secure systems. Strict segmentation, firewalls, and layered defenses must protect the vault. Merchant systems should never directly contact the vault except via secure APIs.

Encrypt Token Vault Storage & Key Management

Although tokens themselves are non-sensitive, the vault often stores mappings to real card data (which must be encrypted). Key management must follow strict protocols, use hardware security modules (HSMs), rotate keys, and maintain key backup and recovery procedures.

Use Secure APIs and Strong Authentication

All communication between merchants and tokenization services should use TLS (1.2+), mutual authentication, certificate pinning, and tamper-resistant endpoints. APIs should throttle, validate inputs, and implement secure error handling.

Audit Logging and Monitoring

Every tokenization, detokenization, and API access should be logged with timestamps, source IP, user ID, and outcome. Logs should be immutable, monitored in real time, and correlated with intrusion detection systems. This ensures detection of anomalies or unauthorized access.
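One way to make logs tamper-evident is to hash-chain them, so each entry commits to the one before it. The sketch below is an illustrative assumption rather than a prescribed format; the field names mirror those listed above, and real deployments would also ship entries to a separate write-once store.

```python
import hashlib
import json
from datetime import datetime, timezone


class HashChainedLog:
    """Append-only audit log in which each entry includes the previous entry's hash.

    Editing or deleting an earlier entry breaks the chain, which makes tampering
    detectable (not impossible), so verification should run regularly.
    """

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, operation: str, user_id: str, source_ip: str, outcome: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "operation": operation,
            "user_id": user_id,
            "source_ip": source_ip,
            "outcome": outcome,
            "prev_hash": prev_hash,
        }
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself, in a canonical order.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def verify(self) -> bool:
        prev_hash = "0" * 64
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != self._digest(entry):
                return False
            prev_hash = entry["hash"]
        return True
```

Changing a single field in any earlier entry, for example flipping an `outcome` from `denied` to `success`, makes `verify()` return `False`.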

Token Lifecycle Management

Tokens should have defined lifespans, expiration, rotation policies, revocation capability, and reuse limits. For example, tokens used for one-time purchases should expire. Multi-use tokens should periodically refresh or migrate, reducing exposure risk.
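A minimal sketch of these lifecycle rules: each token carries an expiry time, an optional use limit, and a revocation flag, and rotation issues a new token for the same card while revoking the old one. All names are hypothetical, and the `now` parameter exists only so expiry can be demonstrated without waiting.

```python
import secrets
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class ManagedToken:
    pan: str
    expires_at: datetime
    max_uses: Optional[int]  # None means unlimited within the lifetime
    uses: int = 0
    revoked: bool = False


class LifecycleTokenService:
    """Hypothetical service enforcing expiry, use limits, revocation, and rotation."""

    def __init__(self) -> None:
        self._tokens: dict[str, ManagedToken] = {}

    def issue(self, pan: str, ttl: timedelta, max_uses: Optional[int] = None) -> str:
        token = secrets.token_hex(16)
        expires_at = datetime.now(timezone.utc) + ttl
        self._tokens[token] = ManagedToken(pan, expires_at, max_uses)
        return token

    def revoke(self, token: str) -> None:
        self._tokens[token].revoked = True

    def rotate(self, token: str, ttl: timedelta) -> str:
        """Issue a fresh token for the same card and revoke the old one."""
        old = self._tokens[token]
        new_token = self.issue(old.pan, ttl, old.max_uses)
        self.revoke(token)
        return new_token

    def use(self, token: str, now: Optional[datetime] = None) -> str:
        now = now or datetime.now(timezone.utc)
        record = self._tokens[token]
        if record.revoked:
            raise PermissionError("token revoked")
        if now >= record.expires_at:
            raise PermissionError("token expired")
        if record.max_uses is not None and record.uses >= record.max_uses:
            raise PermissionError("use limit reached")
        record.uses += 1
        return record.pan
```

A one-time purchase token might be issued with `ttl=timedelta(minutes=15), max_uses=1`, while a subscription token gets a longer lifetime and is rotated periodically.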

Use Contextual and Binding Constraints

Bind tokens to specific merchants, devices, channels, time windows, or geographic constraints where possible. This makes stolen tokens useless outside their intended context, enhancing how tokenization protects customer payment data through contextual limitation.

Perform Penetration Testing and Security Reviews

Regularly test both merchant integration and the token vault itself with external auditors or security firms. Validate the irreversibility of tokens, resistance to side-channel attacks, and robustness of access controls.

Follow Industry and Standards Guidelines

Adhere to PCI DSS, EMVCo tokenization standards, and recommendations from the PCI Security Standards Council. Keep up with tokenization best practices endorsed by industry bodies.

Provide Fallbacks and Redundancy

Have backup vault nodes, failover paths, and disaster recovery plans. Ensure continuous operation even if one vault instance fails. Plan for high availability and system resilience.

By carefully applying these best practices, any organization can maximize the security of token-based systems and confidently rely on how tokenization protects customer payment data in real-world deployment.

Tokenization in the Broader Security Ecosystem

While tokenization is powerful, it must be integrated within a layered security and data protection strategy. Here’s how it fits into the bigger picture, working alongside other techniques to protect customer payment data.

Layered Security (Defense in Depth)

Tokenization should be just one layer among many: network firewalls, intrusion detection, endpoint protection, behavioral analytics, fraud detection, secure coding practices, access control, and encryption. A multi-layered defense ensures that even if one layer fails, others help protect sensitive data.

Encryption for Data in Transit

Even when sending card data to a tokenization endpoint, the transmission channel must be encrypted (e.g. TLS 1.2+). This prevents man-in-the-middle attacks. Tokenization protects data in the processing and storage layers; encryption protects it as it travels across networks. Together, they ensure end-to-end security.

Point-to-Point Encryption (P2PE) & Tokenization Synergy

In POS environments, point-to-point encryption can encrypt data from the card reader to the payment processor, and once inside the processor environment, tokenization replaces it before storage or further routing. This hybrid approach shields card data at every stage.

Integration with Fraud Detection & Analytics

While tokenization shrinks the exposure of raw data, transaction monitoring and fraud detection systems need visibility into patterns and anomalies.

Merchant systems can store metadata (timestamp, transaction amount, merchant ID) alongside the token to feed analytics without exposing card numbers. This enables intelligent fraud detection while preserving privacy.

Compliance with Privacy & Data Protection Regulations

Even with non-sensitive tokens, privacy regulations (like GDPR, CCPA) may impose restrictions on how metadata or mappings are handled.

Tokenization helps with data minimization — storing only what is necessary — which aligns with many regulatory frameworks. But token systems must also support deletion, audit, and subject access requests.

Future Technologies: Blockchain and Distributed Tokens

Emerging research explores using blockchain, federated tokenization networks, or distributed token vaults to reduce centralization risk. While still in development, these technologies may further evolve how tokenization protects customer payment data in decentralized architectures.

By integrating tokenization with other protective layers, organizations build a more resilient system that effectively shields customer payment data across all stages and interfaces.

Implementation Strategy: Steps to Adopt Tokenization in Your Business

Adopting tokenization cannot be an afterthought — it requires planning, architecture, integration, and governance. Here is a strategic roadmap to implement tokenization and understand how tokenization protects customer payment data in your specific context.

Step 1: Assess Data Flows and Scope

Start by mapping where card data enters your systems: web apps, mobile apps, POS devices, APIs, databases, logs, backups. Identify touchpoints that currently process, store, or transmit PANs, CVVs, or related fields. This helps you scope tokenization boundaries and integration points.

Step 2: Select Tokenization Model and Vendor

Decide whether to build in-house or outsource to a tokenization service provider. Evaluate vendors based on security certifications, vault design, SLA, regional presence, reliability, audit record, API features, and cost. Choose a model (single-use, multi-use, format-preserving) that suits your use cases.

Step 3: Define Tokenization Architecture

Design how the token vault integrates: its network segmentation, API interface, access control, redundancy, disaster recovery, and connectivity to merchant systems. Plan whether to use a centralized or distributed vault, and how tokens will be bound (merchant, device, time constraints).
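To make "binding" concrete, here is a minimal sketch, assuming the vault records a merchant, an optional device, and an expiry time for each token and checks them on every use. The `TokenBinding` schema and the IDs are hypothetical; real providers define their own binding rules.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class TokenBinding:
    """Constraints stored with a token at issuance (hypothetical schema)."""
    merchant_id: str
    device_id: Optional[str]  # None means the token is not device-bound
    expires_at: datetime


def binding_allows(binding: TokenBinding, merchant_id: str,
                   device_id: Optional[str], now: datetime) -> bool:
    """Return True only if this use satisfies every constraint on the token."""
    if merchant_id != binding.merchant_id:
        return False
    if binding.device_id is not None and device_id != binding.device_id:
        return False
    return now < binding.expires_at


binding = TokenBinding("m-001", "dev-42", datetime(2026, 1, 1, tzinfo=timezone.utc))
now = datetime(2025, 10, 10, tzinfo=timezone.utc)
print(binding_allows(binding, "m-001", "dev-42", now))  # True
print(binding_allows(binding, "m-999", "dev-42", now))  # False: wrong merchant
print(binding_allows(binding, "m-001", "dev-07", now))  # False: wrong device
```

Checking bindings inside the vault, rather than in merchant code, means a stolen token fails the same checks no matter which system presents it.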

Step 4: Integrate Front-End Systems

Modify your payment capture logic so that raw card data is sent directly to the token service (bypassing your internal systems). Ensure all internal systems only expect tokens, not real PANs. Use secure API calls, certificate-based authentication, TLS, and validation.
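The call from the payment page or app to the token service is provider-specific and not shown here. What internal services can do is refuse anything that is not a token. The sketch below is a defensive boundary check, assuming a hypothetical token format of `tok_` followed by 32 hex characters; substitute your provider's actual format.

```python
import re

TOKEN_FORMAT = re.compile(r"tok_[0-9a-f]{32}")  # hypothetical token format
DIGITS_ONLY = re.compile(r"\d{13,19}")


class RawCardDataError(ValueError):
    """Raised when something that looks like a PAN reaches an internal system."""


def require_token(payment_ref: str) -> str:
    """Boundary check for internal services: accept tokens, refuse anything else."""
    cleaned = payment_ref.replace(" ", "").replace("-", "")
    if DIGITS_ONLY.fullmatch(cleaned):
        # Never echo the value into the exception message or logs.
        raise RawCardDataError("raw card number received; expected a token")
    if not TOKEN_FORMAT.fullmatch(payment_ref):
        raise ValueError("malformed payment token")
    return payment_ref


print(require_token("tok_" + "0f" * 16))
try:
    require_token("4111 1111 1111 1111")
except RawCardDataError as exc:
    print("rejected:", exc)
```

A check like this does not replace routing card data directly to the token service; it catches integration mistakes before a PAN lands in an internal database or log.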

Step 5: Adjust Back-End Systems

Update databases, order management systems, billing engines, and reporting tools to accommodate tokens instead of card numbers. Ensure all business logic, metadata, and analytics treat tokens properly. Remove or disable any modules that previously handled raw card data.
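A back-end schema after the change might look like the sketch below, using Python's built-in `sqlite3` for a self-contained example. The table and column names are hypothetical. It keeps the token plus the last four digits and card brand for display and support, and has no column for the full card number at all.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE orders (
        order_id      INTEGER PRIMARY KEY,
        customer_id   TEXT NOT NULL,
        payment_token TEXT NOT NULL,                          -- issued by the token service
        card_last4    TEXT CHECK (length(card_last4) = 4),    -- display only
        card_brand    TEXT,
        amount_cents  INTEGER NOT NULL
    )
    """
)
conn.execute(
    "INSERT INTO orders (customer_id, payment_token, card_last4, card_brand, amount_cents) "
    "VALUES (?, ?, ?, ?, ?)",
    ("cust-17", "tok_9f2c41d0", "1111", "VISA", 4999),
)

row = conn.execute(
    "SELECT payment_token, card_last4, amount_cents FROM orders WHERE customer_id = ?",
    ("cust-17",),
).fetchone()
print(row)  # ('tok_9f2c41d0', '1111', 4999)

# Confirm the schema has nowhere to put a full card number.
columns = [info[1] for info in conn.execute("PRAGMA table_info(orders)")]
print(columns)
```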

Step 6: Implement Detokenization & Lifecycle Controls

Define who (or which subsystem) can detokenize under what circumstances (refunds, audits). Implement token expiration, rotation, and revocation logic. Build logging, audit trails, and alerting when detokenization occurs.
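The sketch below shows the shape of such controls in a toy in-memory vault, assuming a purpose-based policy: each caller role may detokenize only for named purposes, and every attempt, allowed or denied, is written to an audit log that records the token but never the card number. The role and purpose names are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class DetokenizationDenied(PermissionError):
    """Raised when a caller is not allowed to detokenize for the stated purpose."""


@dataclass
class Vault:
    """Toy in-memory vault: token -> PAN, guarded by a purpose-based policy."""
    mappings: dict
    # Hypothetical policy: caller role -> purposes it may detokenize for.
    policy: dict = field(default_factory=lambda: {
        "refund-service": {"refund"},
        "audit-team": {"audit"},
    })
    audit_log: list = field(default_factory=list)

    def detokenize(self, token: str, caller: str, purpose: str) -> str:
        allowed = purpose in self.policy.get(caller, set())
        # Log every attempt, allowed or not; the PAN itself is never logged.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "caller": caller,
            "purpose": purpose,
            "token": token,
            "allowed": allowed,
        })
        if not allowed:
            raise DetokenizationDenied(f"{caller} may not detokenize for {purpose}")
        return self.mappings[token]


vault = Vault(mappings={"tok_A": "4111111111111111"})
pan = vault.detokenize("tok_A", caller="refund-service", purpose="refund")
try:
    vault.detokenize("tok_A", caller="analytics", purpose="report")
except DetokenizationDenied:
    pass
print([entry["allowed"] for entry in vault.audit_log])  # [True, False]
```

Denied attempts are often the more interesting audit entries, since they can reveal misconfigured services or probing from a compromised host.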

Step 7: Test Thoroughly & Validate Security

Conduct integration testing, penetration testing, and audits (especially of the vault). Validate that tokens cannot be reverse-engineered, that detokenization is restricted to authorized callers, and that boundary systems never see raw card data. Also test failure modes such as node outages, high latency, and error handling.

Step 8: Migrate Existing Card Data

If you currently store card data, migrate it into the vault and replace stored PANs with tokens. You may need a phased migration, fallbacks, or batch processing to ensure continuity.
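A phased migration can be sketched as a batch job that swaps each stored PAN for a token and deletes the PAN only after the token exists. Here `tokenize` is a hypothetical stand-in for your token service, and the `pan` and `payment_token` field names are assumptions. Skipping rows that already have a token makes the job safe to re-run after a partial failure.

```python
import secrets


def tokenize(pan: str, vault: dict) -> str:
    """Hypothetical stand-in for the token service: random token, mapping kept in vault."""
    token = "tok_" + secrets.token_hex(16)
    vault[token] = pan
    return token


def migrate_in_batches(rows: list, vault: dict, batch_size: int = 500) -> int:
    """Replace each row's `pan` with a `payment_token`, one batch at a time.

    Rows that already carry a token are skipped, so the job can be re-run
    safely after a partial failure. Returns the number of rows migrated.
    """
    migrated = 0
    for start in range(0, len(rows), batch_size):
        for row in rows[start:start + batch_size]:
            if "payment_token" in row:
                continue
            row["payment_token"] = tokenize(row["pan"], vault)
            del row["pan"]  # remove the PAN only after the token exists
            migrated += 1
        # A real job would commit this batch and checkpoint `start` here.
    return migrated


rows = [
    {"order_id": 1, "pan": "4111111111111111"},
    {"order_id": 2, "pan": "5555555555554444"},
    {"order_id": 3, "payment_token": "tok_existing"},
]
vault = {}
migrated = migrate_in_batches(rows, vault, batch_size=2)
print(migrated)                       # 2
print(any("pan" in r for r in rows))  # False
```

Remember that backups, replicas, and exports taken before the migration still contain PANs and need their own cleanup plan.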

Step 9: Monitor, Audit, and Maintain

Continuously monitor token usage, detokenization events, errors, performance, and access. Ensure compliance through audits and reviews. Periodically re-evaluate tokenization logic, cryptographic strength, and vendor SLAs.
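Detokenization events are a natural thing to watch, because a sudden burst can indicate a compromised service account. Below is a minimal sliding-window monitor, assuming event timestamps in seconds; the threshold and window are illustrative values, not recommendations.

```python
from collections import deque


class DetokenizationRateMonitor:
    """Alert when more than `max_events` detokenizations occur within `window_seconds`."""

    def __init__(self, max_events: int, window_seconds: float):
        self.max_events = max_events
        self.window = window_seconds
        self.events = deque()  # timestamps of recent events, oldest first

    def record(self, ts: float) -> bool:
        """Record an event at time `ts`; return True if the threshold is breached."""
        self.events.append(ts)
        # Drop events that have fallen out of the window.
        while self.events and self.events[0] <= ts - self.window:
            self.events.popleft()
        return len(self.events) > self.max_events


monitor = DetokenizationRateMonitor(max_events=3, window_seconds=60)
alerts = [monitor.record(t) for t in (0, 10, 20, 30, 100)]
print(alerts)  # [False, False, False, True, False]
```

In practice you would keep one monitor per caller or service account, so a single noisy subsystem stands out.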

Step 10: Educate Teams & Update Processes

Train development, operations, support, and compliance teams about tokenization. Update security policies, standard operating procedures, disaster recovery plans, incident response, and audits to include tokenization practices.

Following these steps ensures that tokenization protects customer payment data not just in design, but in fully operational, real-world systems—with security, compliance, and reliability in balance.

Future Trends & Innovations in Payment Tokenization

As threats evolve and payment systems progress, how tokenization protects customer payment data also must evolve. Here are some emerging trends and innovations:

Dynamic and Per-Transaction Tokens

Instead of reusing tokens across many transactions, more systems are adopting dynamic tokens that are unique to each transaction. Even if one is intercepted, it cannot be exploited beyond the transaction it was issued for. This further strengthens security and reduces replay risk.
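The one-shot idea behind per-transaction tokens can be sketched as a service whose tokens are invalidated on first redemption, so a replayed token is simply unknown. Production schemes may achieve per-transaction uniqueness differently, for example with a one-time cryptogram accompanying a longer-lived token; this sketch shows only the replay-rejection behavior. The `card_ref` value is a hypothetical internal reference.

```python
import secrets


class SingleUseTokenService:
    """Each token maps to a card reference and is invalidated on first redemption."""

    def __init__(self):
        self._live = {}  # token -> card reference

    def issue(self, card_ref: str) -> str:
        token = secrets.token_urlsafe(16)  # unpredictable, unrelated to the card
        self._live[token] = card_ref
        return token

    def redeem(self, token: str) -> str:
        # pop() makes redemption one-shot: a second attempt finds nothing.
        card_ref = self._live.pop(token, None)
        if card_ref is None:
            raise LookupError("token unknown, expired, or already used")
        return card_ref


svc = SingleUseTokenService()
token = svc.issue("card-ref-123")
print(svc.redeem(token))  # card-ref-123
try:
    svc.redeem(token)
except LookupError as exc:
    print("replay rejected:", exc)
```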

Biometric Tokenization & Multi-Factor Tokens

Combining biometric authentication (e.g. fingerprint or face recognition) with tokenization yields a multi-factor bound token. The biometric component may itself be tokenized (biometric tokenization), binding the token more tightly to a verified identity. This approach increases assurance that the token is being used by its rightful owner.

Federated Tokenization Networks

Rather than a single provider, federated networks may allow tokens to be universally accepted across merchants or ecosystems, while still preserving isolation and security. This model supports interoperability while still protecting data.

Zero-Knowledge and Secure Multi-Party Computation (MPC)

Advanced cryptographic techniques, like zero-knowledge proofs and MPC, can allow validation of token usage without revealing the underlying card data. These methods can strengthen how tokenization protects customer payment data by reducing what any system ever “knows.”

Blockchain-based or Distributed Token Vaults

Some research explores using blockchain or distributed ledgers to decentralize token management, reduce central points of attack, and improve transparency. While still nascent, such architectures might redefine token vault models in the future.

AI-Powered Anomaly Detection in Token Ecosystems

Applying machine learning and behavioral analytics to token usage patterns can detect suspicious activity and attempted token misuse. For example, a token used far outside its typical merchant or geography could trigger an alert.
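The geography and merchant example can be expressed as a simple rule-based baseline. This is a stand-in for illustration, not a machine-learning model: it keeps a per-token history of merchants and countries and flags uses that fall outside it once the token has enough history. The `min_history` value and the IDs are hypothetical.

```python
from collections import Counter, defaultdict


class TokenUsageProfile:
    """Per-token merchant and country history; flags uses outside the norm."""

    def __init__(self, min_history: int = 5):
        self.min_history = min_history
        self.merchants = defaultdict(Counter)
        self.countries = defaultdict(Counter)

    def observe(self, token: str, merchant_id: str, country: str) -> list:
        """Return reasons this use looks unusual, then add it to the token's history."""
        reasons = []
        # Only judge tokens with enough history; new tokens are not flagged here.
        if sum(self.countries[token].values()) >= self.min_history:
            if country not in self.countries[token]:
                reasons.append(f"new country {country}")
            if merchant_id not in self.merchants[token]:
                reasons.append(f"new merchant {merchant_id}")
        self.merchants[token][merchant_id] += 1
        self.countries[token][country] += 1
        return reasons


profile = TokenUsageProfile(min_history=3)
for _ in range(3):
    profile.observe("tok_A", "m-001", "US")
normal = profile.observe("tok_A", "m-001", "US")
unusual = profile.observe("tok_A", "m-777", "RO")
print(normal)   # []
print(unusual)  # ['new country RO', 'new merchant m-777']
```

A learned model would replace these hard rules with scores, but the inputs are the same: token identifiers and metadata, never card numbers.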

Regulatory Evolution & Token Standards

As regulators evolve data privacy and payment rules, tokenization standards (e.g. EMVCo tokenization, regional guidelines) will continue to mature. Organizations must stay updated to ensure that tokenization protects customer payment data in compliance with evolving mandates.

These trends illustrate that tokenization is not static—it will continue advancing in sophistication and context sensitivity to stay ahead of threats.

Frequently Asked Questions (FAQs)

Below are common questions and detailed answers about how tokenization protects customer payment data:

Q1: Can a hacker use a stolen token to make purchases?

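Answer: Generally, no. A token by itself is meaningless outside its intended context, and a vault-issued token cannot be reversed to recover the card number. Tokens are also often restricted by merchant, device, time, or geography, so a stolen token typically cannot be used at other merchants or to reveal card details. The residual risk is misuse within the token's own domain (for example, by an attacker who gains access to the merchant's systems), which is why binding rules and fraud monitoring still matter.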

Q2: Is tokenization enough by itself, or should encryption also be used?

Answer: Tokenization is powerful for protecting data at rest. But encryption is essential for securing data in transit (e.g. TLS). The optimal approach is layered: encrypt communication, then tokenize data at rest. In POS systems, use P2PE (encrypt at swipe) plus tokenization before storage. A combined approach ensures full protection.

Q3: How does tokenization reduce PCI DSS scope?

Answer: Because a token cannot be used to recover the PAN, systems that store, process, or transmit only tokens, and that are properly segmented from the vault and any detokenization capability, can often be removed from the scope of many PCI DSS requirements. The tokenization system itself remains in scope. This reduces audit burden, infrastructure complexity, and risk, while still satisfying regulatory demands.

Q4: Can tokens expire or be revoked?

Answer: Yes. Tokens should support lifecycle management: expiration, revocation, rotation, and reissuance. For example, single-use tokens become invalid after their first use, while multi-use tokens might be renewed periodically to reduce exposure risk.

Q5: What happens during detokenization or refunds?

Answer: Detokenization (mapping token back to original data) is performed only inside the secure vault, under strict policies and audit. Refunds or chargebacks initiate a vault-controlled detokenization only when required, limiting exposure.

Q6: Are format-preserving tokens safe?

Answer: Yes — when implemented correctly. Format-preserving tokenization produces tokens that follow the same structure (length, numeric format) as original data, facilitating legacy integration. The key is that tokens are still non-predictable and irreversible without access to vault logic.
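A minimal sketch of a random format-preserving token: same length and all digits, keeping the last four digits of the PAN for display. One design option some implementations choose, treated here as an assumption rather than a requirement, is to make the token fail the Luhn check so it can never be mistaken for a live card number. Because the token is random, the vault must store the mapping and check for collisions with existing tokens; both steps are omitted here.

```python
import secrets


def luhn_valid(digits: str) -> bool:
    """Luhn (mod 10) checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d = d * 2 - 9 if d > 4 else d * 2
        total += d
    return total % 10 == 0


def format_preserving_token(pan: str, keep_last: int = 4) -> str:
    """Random digits of the same length as `pan`, keeping its last `keep_last` digits.

    Assumed design choice: reject candidates that pass Luhn, so the token
    cannot be confused with a real card number.
    """
    body_len = len(pan) - keep_last
    while True:
        body = "".join(secrets.choice("0123456789") for _ in range(body_len))
        candidate = body + pan[body_len:]
        if candidate != pan and not luhn_valid(candidate):
            return candidate


pan = "4111111111111111"
tok = format_preserving_token(pan)
print(len(tok) == len(pan), tok.endswith("1111"), tok.isdigit())  # True True True
```

Since the body digits come from a cryptographically secure random source rather than from the PAN, the token reveals nothing beyond the retained last four digits.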

Q7: Can tokenization handle recurring billing or stored card use cases?

Answer: Absolutely. Tokenization enables merchants to store a token representing card data, which can be reused for recurring payments without storing the real card. Thus, customers enjoy convenience and merchants avoid storing sensitive data directly.

Q8: What happens if the tokenization provider is compromised?

Answer: That risk must be mitigated by vault design: multiple layers of encryption, segmentation, audits, redundancy, intrusion detection, and minimal access. The vault is the crown jewel and must be defended aggressively.

Q9: How do privacy laws like GDPR interact with tokenization?

Answer: Tokenization supports data minimization: only essential data is stored, in de-identified token form. However, tokens and metadata may still be considered personal data under some laws, so systems must support subject access, deletion, and audit. Proper governance is needed.

Q10: What should a business consider before implementing tokenization?

Answer: Key considerations include mapping current data flows, selecting a stable vendor or building robust vault architecture, planning for integration, handling detokenization and lifecycle, performance design, compliance audit, and ongoing monitoring. A phased rollout and testing are advisable.

Conclusion

Protecting customer payment data is paramount in the modern digital economy. Tokenization has emerged as one of the most effective, practical, and scalable techniques to achieve this goal, without compromising performance or customer experience.

By replacing real payment data with meaningless tokens, isolating sensitive information in a secure vault, and strictly controlling detokenization, tokenization protects customer payment data at every stage—from collection through storage, processing, and reconciliation.

It dramatically reduces the risk of breach exposure and shrinks PCI DSS compliance scope, all while enabling features like recurring billing, one-click checkout, and seamless mobile wallet integration.

However, to succeed, tokenization must be architected, deployed, and operated with care: vault security, key management, token lifecycle, access control, auditing, and redundancy must all be rigorously addressed. Coupled with encryption, fraud monitoring, and layered security defenses, tokenization becomes a formidable shield.

As payment systems evolve, tokenization continues to adapt, moving toward dynamic tokens, biometric binding, federated networks, and more advanced cryptographic models. The ways tokenization protects customer payment data are therefore not fixed; they keep improving.

If you are a merchant, payment provider, or developer seeking to adopt tokenization, start by analyzing your data flows, selecting a reliable vault or tokenization partner, planning integration carefully, and building a phased and audited rollout. Adopt strong best practices, monitor rigorously, and engage in regular security review.

With that approach, tokenization can serve not just as a security tool—but as a foundation that ensures your customers’ payment data remains shielded, your business maintains trust, and compliance burdens stay manageable.