By Annabelle King October 10, 2025

In the domain of payment processing and financial data security, the terms encryption and tokenization are often used—and sometimes misused—as if they are interchangeable.

Yet, while both encryption and tokenization aim to protect sensitive data, they operate in fundamentally different ways, and each has unique strengths, trade-offs, and applications.

Understanding the differences—and how they can complement each other—is critical for merchants, payment service providers (PSPs), financial institutions, and developers who manage or process customer cardholder data.

This article explores each concept in depth, explains real-world use cases, describes how encryption and tokenization interact, covers regulatory and compliance implications, and addresses common questions.

By the end, you’ll have a clear, up-to-date, and practical understanding of the differences between encryption and tokenization in payments.

What Is Encryption?

Encryption is a security technique in which data is transformed—through a mathematical algorithm—into an unreadable format (ciphertext), such that only those with the correct cryptographic key can convert it back (decrypt) into intelligible data.

How Encryption Works

When you perform encryption, the original plaintext (for example, a credit card number, a name, or other confidential data) is processed using:

- An encryption algorithm (cipher). Common modern choices include AES (Advanced Encryption Standard), a symmetric cipher used for bulk data, and the asymmetric schemes RSA and ECC (Elliptic Curve Cryptography), which are mostly used for key exchange and digital signatures rather than for encrypting large volumes of data.

- A key (or keys): symmetric (the same key encrypts and decrypts) or asymmetric (a public key encrypts, and only the matching private key decrypts).

The output is ciphertext (unintelligible gibberish) that is safe to store or transmit, because without the correct decryption key, the data remains unreadable.

For example, a credit card number 4111 1111 1111 1111 might be encrypted to something like X9#k2F7*!3sdL2Q@z. Only someone with the correct decryption key can revert that ciphertext back to 4111 1111 1111 1111.
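To make the round trip concrete, here is a minimal Python sketch of symmetric, authenticated encryption. Python's standard library has no AES, so this sketch swaps in a teaching construction: an HMAC-SHA256 keystream in counter mode followed by an HMAC tag (encrypt-then-MAC). The function names are hypothetical and this is not production cryptography; real systems should use a vetted AES-GCM implementation (for example, `AESGCM` from the `cryptography` package) with keys held in an HSM.

```python
import hashlib
import hmac
import secrets

# Teaching stand-in for AES-GCM; do NOT use for real cardholder data.
NONCE_LEN = 16
TAG_LEN = 32  # SHA-256 output size in bytes


def _subkeys(key: bytes) -> tuple[bytes, bytes]:
    # Derive independent encryption and MAC keys from one key.
    enc_key = hmac.new(key, b"encrypt", hashlib.sha256).digest()
    mac_key = hmac.new(key, b"authenticate", hashlib.sha256).digest()
    return enc_key, mac_key


def _keystream(enc_key: bytes, nonce: bytes, length: int) -> bytes:
    # HMAC-SHA256(enc_key, nonce || counter) for counter = 0, 1, 2, ...
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hmac.new(enc_key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def encrypt(key: bytes, plaintext: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(NONCE_LEN)  # fresh random nonce per message
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ s for p, s in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt(key: bytes, blob: bytes) -> bytes:
    if len(blob) < NONCE_LEN + TAG_LEN:
        raise ValueError("ciphertext too short")
    enc_key, mac_key = _subkeys(key)
    nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    # Constant-time comparison: a wrong key or tampered data fails here.
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed: wrong key or modified ciphertext")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ s for c, s in zip(ciphertext, stream))


if __name__ == "__main__":
    key = secrets.token_bytes(32)
    blob = encrypt(key, b"4111111111111111")
    print(blob.hex())          # random-looking; 64 bytes for a 16-digit PAN
    print(decrypt(key, blob))  # b'4111111111111111'
```

Note that the output (64 bytes here) is longer than the 16-digit input and is no longer numeric, which is exactly the kind of format change that can trip up legacy systems.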

When data is stored or transmitted in encrypted form, a breach does far less damage: even if attackers obtain the encrypted data, they cannot decipher it without the key.

Encryption is often used in two principal contexts in payments:

- In transit: As data moves from the cardholder’s browser or POS terminal to the payment server, encryption (typically TLS 1.2 or later; SSL and early TLS versions are considered insecure) protects it from interception.

- At rest: When payment data is stored (e.g. in databases, backups, archives, or logs), encryption ensures that even a stolen copy cannot be read without the key.

However, encryption is not a complete “set-and-forget” solution. Proper key management, algorithm strength, and protocol design are essential for encryption to remain secure.

Strengths and Weaknesses of Encryption

Strengths

- Proven technology: Encryption is well studied, widely trusted, and standardized (e.g. AES, RSA).

- Reversibility: You can decrypt data when needed (with proper authorization).

- Protection in transit and storage: Encryption covers many stages of data movement and storage.

- Regulatory compliance: Many security standards and regulations mandate encryption of sensitive data.

Weaknesses / Challenges

- Key management risk: If a decryption key is compromised, the protection is lost, because anyone holding the key can read the data.

- Performance overhead: Encrypting and decrypting data can add computational load, especially for high transaction volumes.

- Sensitive data still at risk: Encrypted data is still considered “sensitive” under many compliance regimes, so the systems that store or process it remain in scope for audits.

- Complexity: Implementation errors, weak algorithms, or poor protocol design can introduce vulnerabilities (e.g. side-channel attacks).

In short: encryption protects data by obscuring it mathematically, but because the data is still recoverable with the key, the system must keep the keys extremely secure.

What Is Tokenization?

Tokenization is a data protection technique where sensitive data is replaced with a non-sensitive surrogate called a token. The token has no meaningful value by itself and cannot be mapped back to the original data without access to the secure tokenization system or vault.

How Tokenization Works

The basic flow is:

- The original sensitive data (e.g. credit card Primary Account Number, or PAN) is submitted to a tokenization system.

- The system generates a token (random or pseudo-random value) that maps to the original data. The mapping is stored in a token vault or mapping database.

- The token (which is non-sensitive) is returned and used in place of the real data for storage or further processing in the merchant’s environment.

- Only a privileged, tightly controlled system (the token vault) can “detokenize” the token to retrieve the original card data, and only when necessary.
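The flow above can be sketched as a tiny in-memory vault in Python. The `TokenVault` class and its methods are hypothetical, and a dictionary stands in for what would really be an isolated, encrypted, access-controlled service. The key design point is that tokens come from a cryptographically secure random generator (`secrets`), so they have no mathematical relationship to the PAN and can be resolved only through the stored mapping.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory vault; a real one is an isolated, hardened service."""

    def __init__(self) -> None:
        self._token_to_pan: dict[str, str] = {}

    def tokenize(self, pan: str) -> str:
        # Random surrogate: nothing about the PAN can be derived from it.
        token = "tok_" + secrets.token_hex(12)
        self._token_to_pan[token] = pan
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault holds the mapping back to the original data.
        if token not in self._token_to_pan:
            raise KeyError("unknown token")
        return self._token_to_pan[token]


vault = TokenVault()
token = vault.tokenize("4000123456789010")
print(token)                    # tok_ followed by 24 random hex characters
print(vault.detokenize(token))  # 4000123456789010 (vault side only)
```

In this sketch, tokenizing the same PAN twice yields two different tokens. Some vaults instead return the existing token for a PAN so that a customer's card maps to one stable token; that is a design choice, not a requirement.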

Tokens are sometimes designed to maintain format (e.g. same length, digits) to ensure compatibility with legacy systems.

For example:

- Original PAN: 4000 1234 5678 9010

- Generated token (not format-preserving): TKN_8391_47AB_56C2
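Where downstream systems expect a card-number-shaped value, a format-preserving token can be generated instead. The Python sketch below (function names are hypothetical) keeps the PAN's length and last four digits and randomizes the rest. As one common design choice, assumed here rather than mandated by any standard, it also rejects candidates that pass the Luhn check-digit test, so a token cannot be mistaken for a valid card number.

```python
import secrets


def luhn_valid(number: str) -> bool:
    """Luhn (mod 10) check-digit test used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        digit = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def format_preserving_token(pan: str, keep_last: int = 4) -> str:
    """Hypothetical token generator: same length, same last digits, fails Luhn."""
    digits = pan.replace(" ", "")
    if not digits.isdigit() or not 0 <= keep_last < len(digits):
        raise ValueError("expected a numeric PAN longer than keep_last digits")
    n_random = len(digits) - keep_last
    suffix = digits[n_random:]  # preserved tail, e.g. last four for receipts
    while True:
        prefix = "".join(secrets.choice("0123456789") for _ in range(n_random))
        candidate = prefix + suffix
        # Reject Luhn-valid candidates (and the PAN itself) so a token can
        # never be confused with a real card number.
        if not luhn_valid(candidate) and candidate != digits:
            return candidate


print(format_preserving_token("4111 1111 1111 1111"))  # random 16 digits ending in 1111
```

The random prefix is not derived from the PAN, so a vault mapping is still needed to get back to the original. Some products also preserve the leading (BIN) digits for routing.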

With tokenization, even if an attacker obtains the token used in a merchant’s database, it is useless by itself without the token vault. The vault is typically heavily secured and separated from the merchant’s environment.

Tokenization is particularly useful in payment systems, subscription billing, digital wallets, and other contexts where cardholder data may be stored or reused. It’s a mechanism widely used in PCI / card industry contexts.

Strengths and Weaknesses of Tokenization

Strengths

- Reduced risk: Tokens contain no sensitive information, so if a merchant’s system is breached, the tokens alone don’t reveal card data.

- PCI / compliance benefits: Because tokens are non-sensitive, systems using tokenization can reduce the scope of PCI DSS controls.

- Low computational cost: No heavy encryption/decryption processing for everyday operations.

- Data compatibility: Because tokens may preserve data format, they can be used in existing systems with minimal disruption.

- Enhanced security posture: Reduced exposure of real card data in broader infrastructure.

Weaknesses / Challenges

- Vault security becomes crucial: The token vault is a prime target; compromise there is catastrophic.

- Token mapping overhead: Maintaining and scaling the mapping database is complex, especially across distributed systems.

- Limited reversibility: Tokens can be reversed only through the vault, so any operation that needs the original data must call the vault.

- Scope of use: Tokenization is highly effective for stored data, but is not a replacement for encryption in transit.

- Implementation complexity: Integration of tokenization, setup of vaults, and secure token services require careful design.

Thus tokenization is not a panacea; it must be designed well and securely, just like encryption.

Key Differences Between Encryption and Tokenization (with Payment Context)

While both encryption and tokenization aim to secure sensitive data, they differ fundamentally. In payment systems, understanding those differences helps you decide which one to employ, or whether to use both.

Below are major distinguishing aspects:

Nature of Protection

- Encryption: protects by scrambling data into ciphertext. The encrypted data is still “sensitive” because if an attacker gains the key, decryption is possible.

- Tokenization: protects by substituting data with a token that has no intrinsic meaning. Even if a token is stolen, it cannot be reversed without the token vault.

Reversibility

- Encryption: is reversible (given the proper key).

- Tokenization: is not mathematically reversible (for randomly generated tokens); the original data can be recovered only by looking the token up in the token vault.

Use Cases: Transit vs Storage

- Encryption: ideal for data in transit (e.g. over networks) and at rest, when storing sensitive data.

- Tokenization: mainly beneficial for data at rest — replacing stored sensitive data, especially in merchant systems, with tokens.

Computational Load

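- Encryption: adds cryptographic processing to every protect and unprotect operation, although hardware-accelerated symmetric ciphers such as AES keep this overhead modest.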

- Tokenization: lower overhead in day-to-day operations, because tokens are simple substitutes.

Compliance and Scope

- Encryption: data remains in scope for security standards (like PCI DSS) because it’s still sensitive.

- Tokenization: may reduce the scope of PCI DSS compliance, because tokens are not considered sensitive data, thereby relieving some burden.

Key / Vault Risk

- Encryption: encryption keys are critical—if compromised, data is exposed.

- Tokenization: the token vault is critical—if compromised, all token mappings are at risk.

Flexibility in Data Format and Systems

- Encryption: often changes data length or format, which can break legacy systems expecting fixed lengths or numeric formats.

- Tokenization: can preserve format (e.g. digit count), making integration easier with existing systems.

Scalability and Performance

- Encryption: scaling may require more processing resources and careful key management across distributed systems.

- Tokenization: building a scalable token vault can be challenging, but day-to-day token lookups are efficient.

Real-World Illustrations in Payments

- A merchant stores tokenized card numbers for recurring billing. Even if their servers are breached, the tokens are useless without the vault.

- But when processing transactions across networks, data is encrypted (e.g. via TLS) so the payment details are protected in motion.

In practice, modern payment systems often combine both approaches: encryption for transport, tokenization for storage. Many payment processors and digital wallets wrap these under “end-to-end encryption + tokenization” stacks.

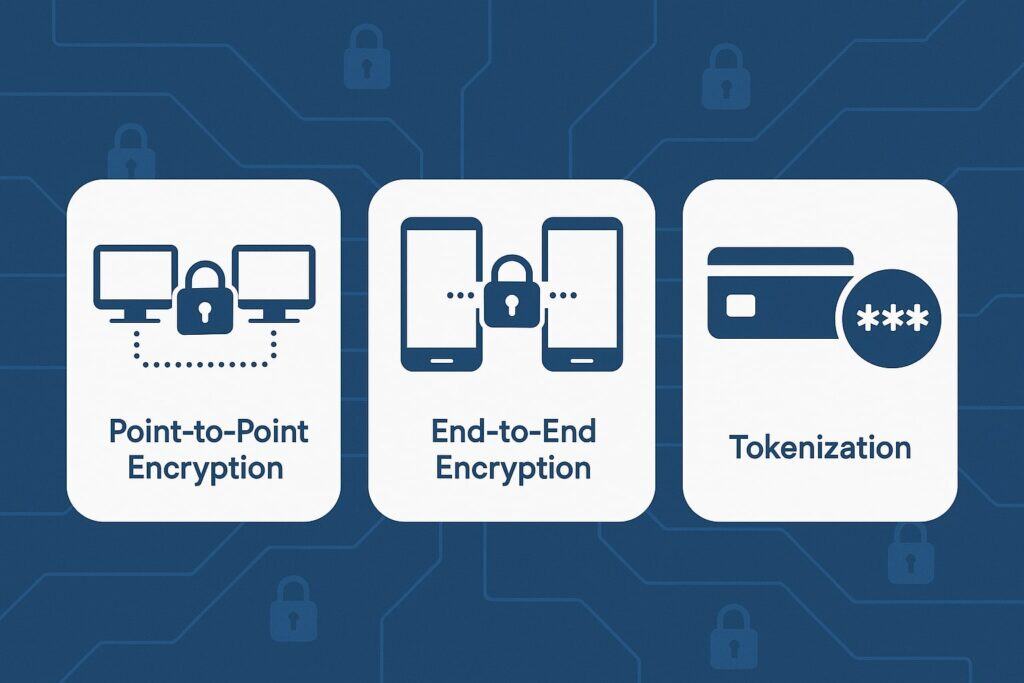

Types and Variants: Point-to-Point Encryption, End-to-End Encryption, and Token Types in Payments

To fully understand the interplay and nuances of encryption vs tokenization, it’s important to know some variants in practice—especially in payments.

Point-to-Point Encryption (P2PE) and End-to-End Encryption (E2EE)

These are encryption models designed to limit exposure of plaintext data.

- Point-to-Point Encryption (P2PE): A standard defined by the PCI Security Standards Council. In a P2PE solution, card data is encrypted at the point of interaction (e.g. a card reader) and stays encrypted until it reaches a secure decryption endpoint (typically at the payment processor). The merchant never has access to the decryption keys.

- End-to-End Encryption (E2EE): A broader concept, indicating that data remains encrypted from the origin (card reader or browser) all the way to the final endpoint. It overlaps conceptually with P2PE, but an E2EE solution is not necessarily validated against the PCI P2PE standard, so it may not deliver the same PCI scope reduction.

In practice, when a payment is made:

- The card data is encrypted immediately at the card terminal using a validated P2PE device.

- The encrypted data travels through networks to the processor.

- At the processor side, data is decrypted in a secure zone.

- Then the processor might generate a token and send that back to the merchant for storage.

This combination minimizes exposure of plaintext and shifts much of the risk from the merchant to the payment processor.

Token Types in Payment Tokenization

Tokenization itself can come in different flavors:

- High-value tokens (HVTs): Surrogates for actual Primary Account Numbers (PANs) that can be used to execute transactions. They are typically formatted like card numbers so they can be processed in payment networks.

- Low-value tokens (LVTs) / security tokens: These are tokens that cannot be used to conduct payment transactions—they only serve as references to the real data in limited contexts.

- Single-use tokens: Tokens that are valid only for one transaction. After use, they cannot be reused.

- Multi-use tokens: Tokens valid across multiple transactions (e.g. for recurring billing).

- Format-preserving tokens: Tokens that maintain the structure (length, prefix, type) of the original data, making them more transparent to legacy systems.

- Device-bound tokens: Tokens tied to a specific device (e.g. mobile wallet) to prevent misuse elsewhere.
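How a vault might enforce these token types can be sketched in Python. `PolicyVault`, `TokenRecord`, and the `device_id` field are hypothetical names; the point is that single-use and device-bound restrictions are checked by the vault when a token is redeemed, not encoded in the token value itself.

```python
import secrets
from dataclasses import dataclass
from typing import Optional


@dataclass
class TokenRecord:
    pan: str
    single_use: bool = False
    device_id: Optional[str] = None  # set only for device-bound tokens
    used: bool = False


class PolicyVault:
    """Hypothetical vault that enforces token-type restrictions on redemption."""

    def __init__(self) -> None:
        self._records: dict[str, TokenRecord] = {}

    def issue(self, pan: str, single_use: bool = False,
              device_id: Optional[str] = None) -> str:
        token = secrets.token_hex(8)
        self._records[token] = TokenRecord(pan, single_use, device_id)
        return token

    def redeem(self, token: str, device_id: Optional[str] = None) -> str:
        record = self._records.get(token)
        if record is None:
            raise PermissionError("unknown token")
        if record.device_id is not None and record.device_id != device_id:
            raise PermissionError("token is bound to a different device")
        if record.single_use and record.used:
            raise PermissionError("single-use token already redeemed")
        record.used = True  # multi-use tokens ignore this flag
        return record.pan
```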

Understanding token types helps decide the suitable tokenization architecture for a merchant or payment system.

Use Cases: When to Use Encryption, When to Use Tokenization, and When to Combine Them

Knowing the differences is not enough—you must apply them strategically based on use cases in payments. Here are typical scenarios:

Use Encryption When…

- You need to protect data in transit (e.g. browser → server, POS terminal → network).

- You must keep sensitive data in your own storage (e.g. databases, backups, or logs) and need to protect it at rest.

- You cannot hand sensitive data off to a third-party service and must protect it within your own infrastructure.

- Regulations or technical standards explicitly require encryption (e.g. when data must traverse multiple systems).

- You need reversible protection so that data can be decrypted when necessary under strict control.

Use Tokenization When…

- You want to store payment credentials (e.g. for recurring billing, card-on-file) without holding the actual card data.

- You want to reduce PCI DSS or regulatory compliance burden by removing sensitive data from your systems.

- You want to mitigate risk of database breaches by substituting tokens for actual data.

- You operate a merchant or subscription business that reuses stored payment credentials frequently (e.g. repeat purchases or recurring charges).

Combine Encryption + Tokenization (the common pattern)

Most robust payment architectures use encryption + tokenization together. The flow typically is:

- Card data is encrypted at the entry point (using TLS/E2EE or P2PE).

- The encrypted data reaches a secure server or processor.

- The processor or secure service decrypts the data inside a secure environment to obtain the real PAN.

- A token is generated to replace the card data, and the merchant receives that token (never sees the real PAN).

- The merchant stores only the token; if refunds or lookups are needed, they pass the token back to the processor.

This layered approach ensures data is secure in transit (via encryption) and secure at rest (via tokenization). It also helps reduce PCI scope and risk exposure.

Stripe, for example, describes encryption and tokenization as techniques that “work together” in payment systems: encryption protects data in transit, and tokenization removes sensitive data from merchant storage.

Mobile wallets (Apple Pay, Google Pay) are another example: the card is stored on the device as a token rather than as the real card number. During a purchase, the wallet generates a token or cryptogram, which is encrypted for transmission. This hybrid approach is now standard in modern payments.

Architectural Considerations and Implementation Challenges

Shifting from theory to practice, implementing encryption and tokenization in real payment systems involves addressing a number of architectural, operational, and security challenges.

Key Management and Cryptography

- Key generation, storage, rotation: Cryptographic keys should be generated inside secure hardware such as HSMs (Hardware Security Modules) and rotated at the end of each defined cryptoperiod to limit exposure.

- Secure storage: Keys must not reside in cleartext in application code or insecure environments.

- Access control: Only authorized systems and personnel should have permission to decrypt.

- Key hierarchy: Use master keys, intermediate keys, and usage keys to segregate duties and minimize risk exposure.
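One way to realize a key hierarchy is to derive purpose-specific, versioned usage keys from a master key with a key derivation function. The Python sketch below implements HKDF-SHA256 (RFC 5869) with the standard library; the `usage_key` helper and the purpose labels are hypothetical. In a real deployment the master key would never leave an HSM, and many systems instead wrap (encrypt) randomly generated data keys under a key-encrypting key rather than deriving them.

```python
import hashlib
import hmac
import secrets

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_sha256(ikm: bytes, info: bytes, length: int = 32, salt: bytes = b"") -> bytes:
    """HKDF extract-then-expand (RFC 5869) using HMAC-SHA256."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested length too large for HKDF-SHA256")
    # Extract: an empty salt is replaced by HashLen zero bytes, per the RFC.
    prk = hmac.new(salt or bytes(HASH_LEN), ikm, hashlib.sha256).digest()
    # Expand: T(i) = HMAC(PRK, T(i-1) || info || i), concatenated and truncated.
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def usage_key(master_key: bytes, purpose: str, version: int) -> bytes:
    # Purpose separates duties; version supports rotation without a new master.
    return hkdf_sha256(master_key, f"{purpose}:v{version}".encode())


master = secrets.token_bytes(32)  # would live inside an HSM in practice
pan_key_v1 = usage_key(master, "pan-encryption", 1)
pan_key_v2 = usage_key(master, "pan-encryption", 2)  # rotated key
log_mac_key = usage_key(master, "audit-log-mac", 1)
```

An intermediate layer can be added the same way, by deriving per-region or per-service keys from the master and usage keys from those.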

Token Vault Security and Scalability

- Vault isolation: The token vault should run in an isolated, hardened environment.

- Access controls and audit trails: Only limited systems or roles may detokenize or access original data, accompanied by audit logs.

- Backup and replication: The vault database must be reliably backed up and replicated across secure data centers, with synchronized token mapping.

- High availability and fault tolerance: Token lookups must be fast and reliable, with enough redundancy that a single failure does not stop them.

- Regional / distributed vaults: For global merchants, vaults may be distributed across regions, requiring synchronization strategies.
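Access control and audit trails can be combined at the detokenization boundary. In this hypothetical Python sketch, only callers whose role is on an allow-list may detokenize, every attempt (allowed or denied) is logged, and the log records the token but never the PAN. A production vault would write to append-only, tamper-evident storage and authenticate callers rather than trusting a role string.

```python
import secrets
from datetime import datetime, timezone


class AuditedVault:
    """Hypothetical vault with role-based detokenization and an audit log."""

    def __init__(self, detokenize_roles: set[str]) -> None:
        self._map: dict[str, str] = {}
        self._detokenize_roles = set(detokenize_roles)
        self.audit_log: list[dict] = []  # stand-in for append-only storage

    def _log(self, caller: str, role: str, action: str, token: str, allowed: bool) -> None:
        # Record who did what to which token; the PAN itself is never logged.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "caller": caller,
            "role": role,
            "action": action,
            "token": token,
            "allowed": allowed,
        })

    def tokenize(self, caller: str, role: str, pan: str) -> str:
        token = secrets.token_hex(12)
        self._map[token] = pan
        self._log(caller, role, "tokenize", token, True)
        return token

    def detokenize(self, caller: str, role: str, token: str) -> str:
        allowed = role in self._detokenize_roles
        self._log(caller, role, "detokenize", token, allowed)  # log denials too
        if not allowed:
            raise PermissionError(f"role {role!r} may not detokenize")
        return self._map[token]
```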

Performance & Latency

- Caching of token lookups: Within allowed security parameters, caching can reduce vault lookup latency.

- Load balancing: For high-volume merchants, multiple token service instances may run behind load balancers.

- Failover strategies: Vault downtime should not cripple merchant operations—fallback modes or queuing must be available.
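A lookup cache can cut vault round trips. The Python sketch below is a small least-recently-used cache with a time-to-live; `TTLCache`, `lookup_metadata`, and the cached fields are hypothetical. As a conservative assumption, it caches only non-sensitive token metadata (such as card brand and last four digits), never the PAN, and the TTL bounds how long stale data can be served.

```python
import time
from collections import OrderedDict
from typing import Any, Callable, Optional


class TTLCache:
    """Small LRU cache whose entries expire after ttl_seconds."""

    def __init__(self, max_entries: int = 10_000, ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._data: OrderedDict = OrderedDict()  # key -> (value, expires_at)
        self._max = max_entries
        self._ttl = ttl_seconds
        self._clock = clock  # injectable for testing

    def get(self, key: str) -> Optional[Any]:
        item = self._data.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self._clock() >= expires_at:
            del self._data[key]  # expired: force a fresh vault lookup
            return None
        self._data.move_to_end(key)  # mark as recently used
        return value

    def put(self, key: str, value: Any) -> None:
        self._data[key] = (value, self._clock() + self._ttl)
        self._data.move_to_end(key)
        while len(self._data) > self._max:
            self._data.popitem(last=False)  # evict least recently used


def lookup_metadata(token: str, cache: TTLCache,
                    vault_lookup: Callable[[str], dict]) -> dict:
    # Cache only non-sensitive metadata (e.g. brand, last four), never the PAN.
    cached = cache.get(token)
    if cached is not None:
        return cached
    metadata = vault_lookup(token)
    cache.put(token, metadata)
    return metadata
```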

Integration with Legacy Systems

- Format preservation: Tokens may need to mimic original data formats to avoid breaking old systems.

- Backward compatibility: Payment networks, banking systems, and clearing systems expect data in specific formats. Tokens that follow those formats are easier to integrate.

- Interoperability: Systems that don’t support tokenization must still communicate with tokenized systems via adapters or gateway layers.

Security and Risk Assessment

- Threat modeling: Consider threat vectors like vault compromise, key theft, side-channel attacks.

- Penetration testing: Regular security tests to validate vault security and encryption implementation.

- Continuous monitoring: Watch for anomalies, unauthorized detokenization attempts, and unusual access patterns.

- Zero trust principles: Segment systems so that a breach of one component doesn’t compromise all.
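As a small example of continuous monitoring, the Python sketch below flags any caller that detokenizes more than a set number of times within a sliding time window. The class name and thresholds are hypothetical; real systems typically feed signals like this into a SIEM alongside many other detections.

```python
from collections import defaultdict, deque


class DetokenizationMonitor:
    """Flags callers whose detokenization rate exceeds a sliding-window limit."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # caller -> timestamps in window

    def record(self, caller: str, timestamp: float) -> bool:
        """Record one detokenization; return True if it should raise an alert."""
        events = self._events[caller]
        events.append(timestamp)
        # Drop events that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests
```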

Compliance and Audit

- PCI DSS compliance: Merchants and service providers must demonstrate compliance with encryption and tokenization controls, key management, and securing stored data. Tokenization may reduce scope, but token vaults still must comply with relevant standards.

- Data protection / privacy laws: GDPR, CCPA, and other data privacy laws require protection of PII. Tokenization aligns well with “data minimization” by reducing storage of real data.

- Auditability and traceability: Systems must log all encryption, decryption, tokenization, and detokenization operations to satisfy auditor requirements.

Although challenging, well-designed architectures can make encryption and tokenization robust, scalable, and manageable for large payment systems.

Regulatory, Compliance, and Industry Standards Impacts

Encryption and tokenization are deeply intertwined with compliance in payment systems. Below, we examine major standards and legal implications.

PCI DSS (Payment Card Industry Data Security Standard)

PCI DSS is the primary standard governing the handling of cardholder data. Some key points:

- Protection of stored data: Stored PANs must be rendered unreadable, for example with strong cryptography and proper key management (PCI DSS also accepts truncation, index tokens, and one-way hashes of the full PAN).

- Tokenization and scope reduction: Because tokens are not considered real cardholder data, systems that only store tokens may fall out of PCI scope or require fewer controls.

- P2PE standard: A PCI-validated P2PE solution helps merchants reduce PCI scope and demonstrates compliance.

- Key management requirements: PCI DSS mandates proper key handling, rotation, storage in secure modules, separation of duties, and access controls.

- Validation and audits: Deploying encryption or tokenization doesn’t absolve merchants from audits; they must still prove compliance.

PCI DSS v4.0 places greater emphasis on cryptographic agility, key rotation, and security testing. Tokenization is also recognized as a way to reduce scope when properly implemented.

Data Protection and Privacy Laws (GDPR, CCPA, etc.)

- Data minimization and pseudonymization: Regulations like GDPR encourage reducing directly identifiable data. Tokenization helps by replacing real identifiers with non-sensitive tokens, although under GDPR pseudonymized data generally still counts as personal data.

- Technical and organizational measures: Laws often require “appropriate measures” to protect personal data; encryption and tokenization are accepted mechanisms.

- Data subject rights: Individuals have rights to access, erase, or modify their data. Tokenization systems should be designed to support these operations without exposing real data.

- Cross-border data transfer: Encrypted or tokenized data may simplify compliance when transferring data across jurisdictions, but design must respect local laws.

Industry Tokenization Standards and Initiatives

- ANSI X9: Tokenization in financial cryptography is addressed in ANSI X9 standards (e.g. X9.119).

- EMVCo tokenization specification: EMVCo, which manages the EMV specifications used across the global card industry, publishes the EMV Payment Tokenisation Specification (Technical Framework).

- Card brand best practices: Visa, Mastercard, and others provide tokenization guidelines and certification processes.

- Token Service Providers (TSPs): Entities offering tokenization services often must follow strict security, audit, and compliance frameworks.

Risk, Liability, and Responsibility

- Using a third-party tokenization or encryption provider often shifts responsibility for handling raw card data and reduces a merchant’s liability in case of breach.

- But if the vault or key management fails, liability can fall on the provider.

- For PCI, validated P2PE solutions may shift fines or liability away from the merchant to solution providers under certain conditions.

In summary, encryption and tokenization are not only cryptographic choices—they are strategic for meeting compliance, reducing liability, and aligning with industry standards.

Example Workflow: A Payment Transaction End-to-End

To make all of this concrete, let’s walk through an end-to-end payment flow where encryption and tokenization are employed in tandem. This illustrates how data moves and transforms through each stage.

Step 1: Card Entry / Capture

A customer enters their card details in a browser (eCommerce) or swipes or taps their card at a terminal (POS). A secure client environment (e.g. a JavaScript SDK or a certified card terminal) encrypts the card data immediately (end-to-end or P2PE). The merchant never sees plaintext card information.

Step 2: Transmission to Payment Processor

The encrypted data is sent over the network to the payment processor or gateway, typically over TLS. Because the payload is already encrypted, TLS adds a second layer, and intermediaries cannot read it.

Step 3: Decryption in Secure Processor Environment

In the processor’s secure infrastructure, data is decrypted to reveal the actual card details—this step happens in a tightly controlled, audited environment.

Step 4: Token Generation

Once the real card data is available, the processor or token service generates a token mapping to that card data. The mapping is stored in a secure token vault.

Step 5: Return Token to Merchant

The processor sends the token (not the card data) back to the merchant. For subsequent operations (refunds, recurring billing, analytics), the merchant references the token.

Step 6: Token Use in Later Transactions

When the merchant needs to perform an action (refund, settlement, billing), they pass the token back to the processor. The processor looks up the token via vault, retrieves (or decrypts) the associated card data, and proceeds with the operation—all transparent to the merchant.

Step 7: Auditing and Monitoring

All encryption, decryption, tokenization, and detokenization events are logged for audit. Any unusual or unauthorized attempts to access the vault or keys trigger alerts and possible revocation.
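Steps 4 through 6 can be sketched in Python to show the separation of duties: the hypothetical `Processor` owns the vault and detokenizes internally, while the hypothetical `Merchant` stores and passes around only tokens. Transport encryption and processor-side decryption (Steps 1 to 3) are assumed to happen before `tokenize` is called and are not modeled.

```python
import secrets


class Processor:
    """Hypothetical processor: owns the token vault; merchants never see PANs."""

    def __init__(self) -> None:
        self._vault: dict[str, str] = {}
        self.ledger: list[tuple[str, str, int]] = []

    def tokenize(self, pan: str) -> str:
        # Steps 4-5: PAN (already decrypted in the secure zone) -> token for the merchant.
        token = "tok_" + secrets.token_hex(12)
        self._vault[token] = pan
        return token

    def _process(self, kind: str, token: str, amount_cents: int) -> bool:
        pan = self._vault[token]  # Step 6: detokenize inside the processor only
        # A real processor would send `pan` to the card network here.
        self.ledger.append((kind, pan[-4:], amount_cents))
        return True

    def charge(self, token: str, amount_cents: int) -> bool:
        return self._process("charge", token, amount_cents)

    def refund(self, token: str, amount_cents: int) -> bool:
        return self._process("refund", token, amount_cents)


class Merchant:
    """Hypothetical merchant: stores and sends tokens only."""

    def __init__(self, processor: Processor) -> None:
        self._processor = processor
        self.cards_on_file: dict[str, str] = {}  # customer id -> token

    def save_card(self, customer_id: str, token: str) -> None:
        self.cards_on_file[customer_id] = token

    def bill(self, customer_id: str, amount_cents: int) -> bool:
        return self._processor.charge(self.cards_on_file[customer_id], amount_cents)

    def refund(self, customer_id: str, amount_cents: int) -> bool:
        return self._processor.refund(self.cards_on_file[customer_id], amount_cents)
```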

This workflow reflects real architectures used by many payment platforms and demonstrates the synergy of encryption and tokenization in practice.

Risks, Failure Modes, and Security Mitigations

Even with encryption and tokenization, mistakes or misconfiguration can expose vulnerabilities. Below is a discussion of risks and how to mitigate them.

Risk: Key Compromise or Mismanagement

- If encryption keys are stolen or misused, encrypted data can be decrypted by attackers.

- Mitigations: Use hardware security modules (HSMs); restrict access; rotate keys regularly; implement split keys and multi-party control; monitor usage; separate environments.
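Split keys can be illustrated with n-of-n XOR splitting, the idea behind dividing a key into components held by different custodians. Each share on its own is uniformly random, and all shares are required to rebuild the key. The function names are hypothetical; a threshold scheme such as Shamir's secret sharing is needed when only m of n custodians should be required.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(key: bytes, n: int) -> list[bytes]:
    """Split key into n shares; all n are needed to recover it (n-of-n)."""
    if n < 2:
        raise ValueError("need at least two shares for split knowledge")
    shares = [secrets.token_bytes(len(key)) for _ in range(n - 1)]
    # Final share = key XOR all random shares, so XOR of every share = key.
    shares.append(reduce(xor_bytes, shares, key))
    return shares


def combine_shares(shares: list[bytes]) -> bytes:
    return reduce(xor_bytes, shares)
```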

Risk: Vault Breach or Mapping Database Exposure

- If the token vault or mapping database is compromised, tokens can be reversed or fraudulently misused.

- Mitigations: Vault isolation; strict access control; intrusion detection; encryption of vault data; network segmentation; regular security assessments; separation of duties.

Risk: Side-Channel Attacks, Timing Leaks, or Memory Exposure

- Attackers may exploit side-channels (timing, power, memory) to glean secrets.

- Mitigations: Harden cryptographic implementations; constant-time algorithms; code audits; physical security measures.

Risk: Replay or Token Reuse in Unauthorized Contexts

- If a token is reused in unintended contexts or by malicious actors, it may be misused.

- Mitigations: Use single-use tokens or context-bound tokens; enforce token usage policies; monitor for anomalies.
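Context binding and replay protection can be illustrated with a per-transaction cryptogram: a MAC over the token, the amount, and a monotonically increasing counter. This Python sketch is a simplification inspired by, but not equivalent to, the cryptograms used in EMV and mobile wallets; `make_cryptogram`, `CryptogramVerifier`, and the message layout are hypothetical, and a real verifier would track counters per token or device.

```python
import hashlib
import hmac
import secrets


def make_cryptogram(device_key: bytes, token: str, amount_cents: int, counter: int) -> str:
    # Binds the cryptogram to this token, amount, and transaction counter.
    message = f"{token}|{amount_cents}|{counter}".encode()
    return hmac.new(device_key, message, hashlib.sha256).hexdigest()


class CryptogramVerifier:
    """Hypothetical verifier for one token/device pair."""

    def __init__(self, device_key: bytes) -> None:
        self._key = device_key
        self._last_counter = -1

    def verify(self, token: str, amount_cents: int, counter: int, cryptogram: str) -> bool:
        expected = make_cryptogram(self._key, token, amount_cents, counter)
        if not hmac.compare_digest(expected, cryptogram):
            return False  # wrong key, or token/amount/counter altered
        if counter <= self._last_counter:
            return False  # replay of an earlier transaction
        self._last_counter = counter
        return True


key = secrets.token_bytes(32)  # provisioned to the device and the verifier
verifier = CryptogramVerifier(key)
c1 = make_cryptogram(key, "tok_abc", 1999, counter=1)
print(verifier.verify("tok_abc", 1999, 1, c1))  # True
print(verifier.verify("tok_abc", 1999, 1, c1))  # False (replayed)
```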

Risk: Inadequate Audit Trails and Monitoring

- Without visibility, malicious detokenization or decryption may go unnoticed.

- Mitigations: Maintain comprehensive logs, alerts, anomaly detection; periodic reviews; enforce segregation of access roles.

Risk: Implementation Bugs or Protocol Flaws

- Poorly implemented cryptography or token logic (e.g. weak random generators, poor validation) can introduce vulnerabilities.

- Mitigations: Use established, audited libraries; peer reviews; formal verification where possible; ongoing security testing.

Risk: Latency or Availability Failures

- Vault downtime or slow token lookup could disrupt business operations.

- Mitigations: Redundancy, failovers, caching (where safe), queuing, distributed architecture.

Through vigilant design, continuous monitoring, and robust security practices, many of these risks can be controlled or mitigated.

Recent Trends and Future Direction

As payment systems evolve, so do encryption and tokenization methods. Below are some contemporary trends and future directions.

Cryptographic Agility & Post-Quantum Preparations

The anticipated threat from large-scale quantum computers, which could break widely used public-key algorithms such as RSA and ECC, is pushing financial systems to adopt cryptographic agility: the ability to switch to quantum-resistant algorithms when needed. Encryption systems in payments must be designed for future algorithm migration.
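Cryptographic agility largely comes down to never hard-coding a single algorithm: each protected value carries an algorithm identifier, and verifiers look up the algorithm by that identifier. The Python sketch below applies the idea to HMAC tags using SHA-256 and SHA3-256; the version labels and registry are hypothetical, and the same pattern would apply when migrating public-key algorithms to quantum-resistant ones.

```python
import hashlib
import hmac

# Registry of MAC hash functions, keyed by the version label stored with each tag.
ALGORITHMS = {
    "v1": hashlib.sha256,
    "v2": hashlib.sha3_256,
}
CURRENT_VERSION = "v2"  # new tags use this; old tags stay verifiable


def sign(key: bytes, message: bytes, version: str = CURRENT_VERSION) -> str:
    digest = hmac.new(key, message, ALGORITHMS[version]).hexdigest()
    return f"{version}:{digest}"


def verify(key: bytes, message: bytes, tagged: str) -> bool:
    version, _, digest = tagged.partition(":")
    algorithm = ALGORITHMS.get(version)
    if algorithm is None:
        return False  # unknown or retired algorithm
    expected = hmac.new(key, message, algorithm).hexdigest()
    return hmac.compare_digest(expected, digest)
```

Retiring an algorithm then means re-signing stored values with the current version and removing the old entry from the registry.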

Tokenization as a Service (TaaS) and Cloud Tokenization

Many payment platforms now offer tokenization-as-a-service, allowing merchants to outsource token vaults to secure third-party providers. This reduces burden but requires trust and auditability.

Device-level & Biometric Tokenization

Mobile wallets increasingly tie tokens to device-specific cryptographic keys stored in secure hardware (e.g. Apple Pay’s Secure Element) and gate their use behind user authentication such as biometrics. This makes tokens even more resistant to misuse.

Format-Preserving Encryption (FPE)

FPE allows encryption that retains the format (length, character set) of the original data, making it more compatible with legacy systems; NIST SP 800-38G standardizes FPE modes such as FF1. It blurs the line between encryption and tokenization in some contexts.

Dynamic or Transactional Tokens

Tokens are becoming more dynamic: unique per transaction, per device, or constrained by context (time, location), reducing reuse attack risk.

Blockchain, Smart Contract Tokenization, and Distributed Tokens

Emerging fintech applications also use tokenization in blockchain and smart contract contexts (e.g. tokenized representations of assets). Although this is not payment data protection, some of the underlying concepts overlap.

Multi-layered Zero Trust Architectures

Next-gen security architectures will embed hybrid encryption and tokenization at every layer—frontend, backend, API layer—with zero trust segmentation.

These trends continuously push the boundary of what “secure payments” means—so system architects must anticipate evolutions.

FAQs (Frequently Asked Questions)

Q1: Can tokenization completely replace encryption in payment systems?

Answer: No. While tokenization is powerful for protecting data at rest (especially in merchant storage), encryption is still necessary for securing data in transit (e.g. between client and server). Moreover, tokenization systems may themselves use encryption internally (for vaults or backups). In practice, the two techniques complement each other, not replace one another.

Q2: Does using tokenization exempt me from PCI DSS compliance?

Answer: Not entirely. Using tokenization can reduce your PCI scope, because the merchant’s system may no longer directly store cardholder data. However, the token vault, token service providers, and relevant interfaces still must comply. So while tokenization helps, you still have compliance responsibilities.

Q3: Is encrypted data always safe?

Answer: Encryption is only as safe as its implementation and key management. Weak algorithms, predictable key storage, flawed protocols, side-channel attacks, or key leaks can undermine safety. Good cryptographic practices and security hygiene are essential.

Q4: What is format-preserving tokenization or format-preserving encryption?

Answer: Format-preserving techniques ensure the output (token or ciphertext) maintains the same format (length, character set) as the original data. This allows integration with legacy systems that expect certain formats (e.g. fixed-length numeric strings).

Both tokenization and encryption solutions may use format-preserving techniques. However, maintaining format sometimes weakens security slightly, so the trade-off must be considered.

Q5: How do mobile wallets (like Apple Pay / Google Pay) use tokenization?

Answer: Mobile wallets store a token (not the actual card number) on the device. During a transaction, the wallet sends the token to the merchant together with a dynamic cryptogram generated for that transaction.

The token service provider (typically the card network) or the issuer validates the cryptogram, and the token is mapped back to the real card number only inside that secure environment. This ensures that card data is not exposed to merchants or intercepted en route.

Q6: If my merchant system is breached, am I safe if I use tokenization?

Answer: If the attacker only gains access to tokenized data (and not the token vault or detokenization system), yes—the tokens themselves are meaningless.

However, if the attacker also compromises the token vault or the tokenization system, then they may recover real data. So tokenization significantly reduces risk, but is not absolute protection if all layers are breached.

Q7: What is a good strategy to adopt encryption and tokenization for my payment platform?

Answer: Start with proven, certified solutions. Use end-to-end encryption or P2PE. Offload tokenization to a trusted token service provider. Ensure strong key management and vault security practices. Monitor, audit, and test periodically. Also, design your architecture for agility, redundancy, and eventual evolution (e.g. post-quantum readiness).

Conclusion

Encryption and tokenization are cornerstone techniques in modern payment security. While they share the goal of protecting sensitive data, their mechanisms, strengths, and limitations differ significantly.

Encryption scrambles data but remains reversible with proper keys; tokenization replaces data with non-sensitive surrogates and shifts the critical risk into the token vault.

In payment systems, the optimal architecture usually employs both: encryption to protect data in transit, and tokenization to protect stored data. This layered approach offers stronger defense, better compliance posture, and reduced exposure.

However, employing them properly demands careful attention: cryptographic key management, vault architecture, secure integration, threat modeling, monitoring, and auditing are all essential. Token vault security becomes as critical as, or more critical than, protecting the merchant systems themselves.

From a compliance and future-proofing perspective, tokenization offers compelling benefits for reducing PCI DSS scope, aligning with privacy regulations, and minimizing liability. At the same time, encryption methods must evolve (e.g. preparing for quantum threats) and remain robust.

Ultimately, the right choice is not encryption versus tokenization, but encryption and tokenization—designed and implemented securely and intelligently. With the layered approach, your payment ecosystem can stay resilient, secure, and ready for evolving threats.